Lecture 2: Fundamentals of Endpoint Encryption in Network Information Security

Basic Theoretical Foundations of Endpoint Encryption

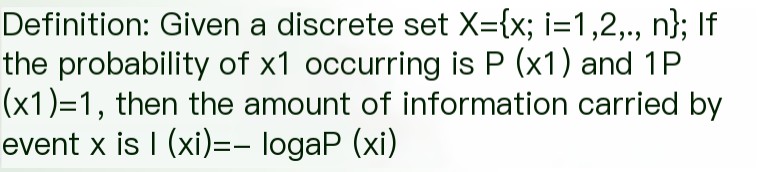

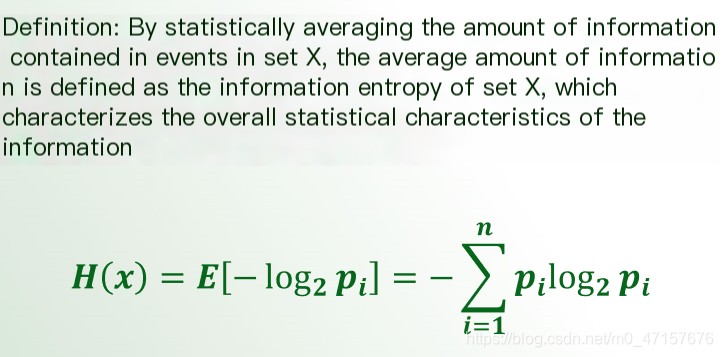

Basic Knowledge of Information Entropy in Endpoint Encryption

- Information entropy is a measure of the “disorder” and “uncertainty” of an information state (essentially, entropy is not a measure of information, but the entropy decreases as the amount of information increases, and it can be used to measure the gain in information).

Information content is measured with a logarithm: an event of probability \( p \) carries \( I = -\log_a p \) units of information. Typically \( a=2 \), in which case the unit of information is the bit. Shannon defined entropy as the mathematical expectation of this information content, \( H = -\sum_i p_i \log_a p_i \). Endpoint encryption plays a crucial role in protecting the confidentiality and integrity of data transmitted or stored on endpoints, reducing the risk of unauthorized access to sensitive information.
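As a minimal sketch of entropy as expected information content, the following computes it for a few distributions. The function name `shannon_entropy` and the sample distributions are illustrative assumptions, not part of the lecture material.

```python
import math

def shannon_entropy(probs, a=2):
    """Expected information content H = -sum(p * log_a(p)) of a distribution.

    With a=2 the result is measured in bits.
    """
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Outcomes with p == 0 contribute nothing (p * log p tends to 0).
    return sum(-p * math.log(p, a) for p in probs if p > 0)

fair_coin = shannon_entropy([0.5, 0.5])    # maximum uncertainty for two outcomes
biased_coin = shannon_entropy([0.9, 0.1])  # more predictable, so lower entropy
certain = shannon_entropy([1.0])           # no uncertainty at all

print(fair_coin, biased_coin, certain)
```

The fair coin yields one bit, the biased coin less than one bit, and the certain outcome zero, which matches the reading of entropy as a measure of uncertainty.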

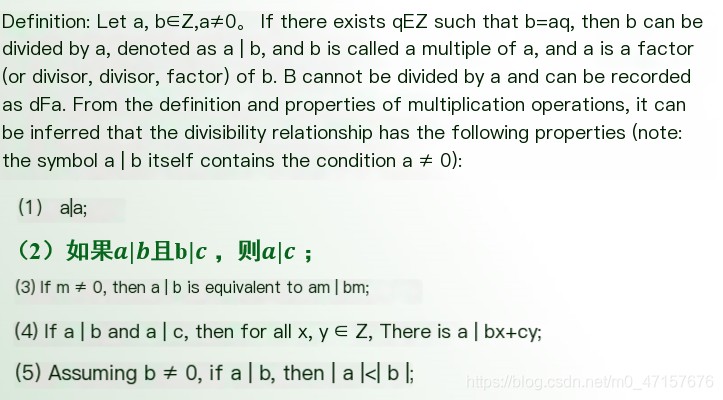

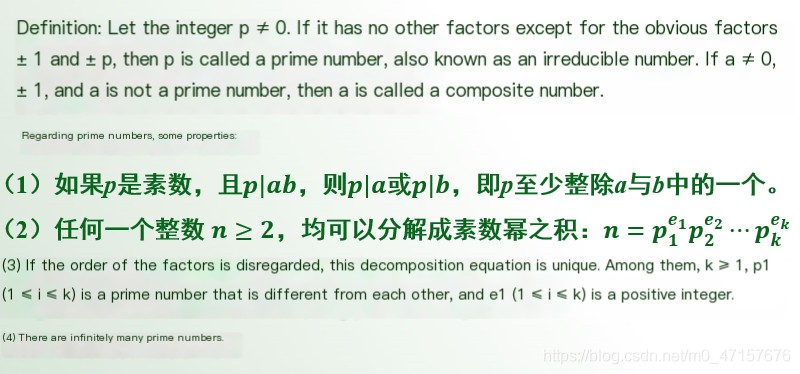

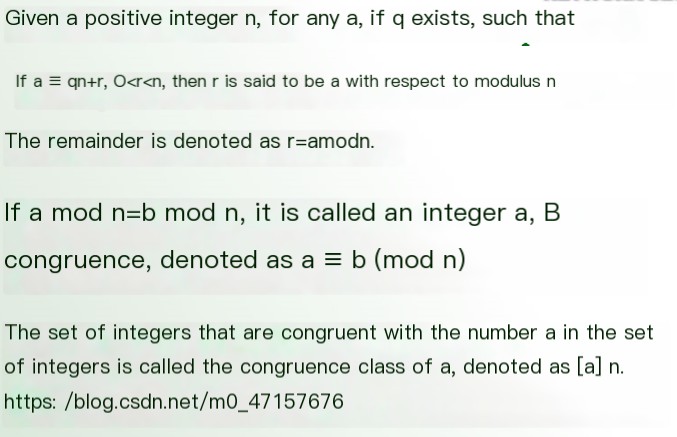

2. Basic Terminology in Number Theory

- Divisibility

- Prime Number

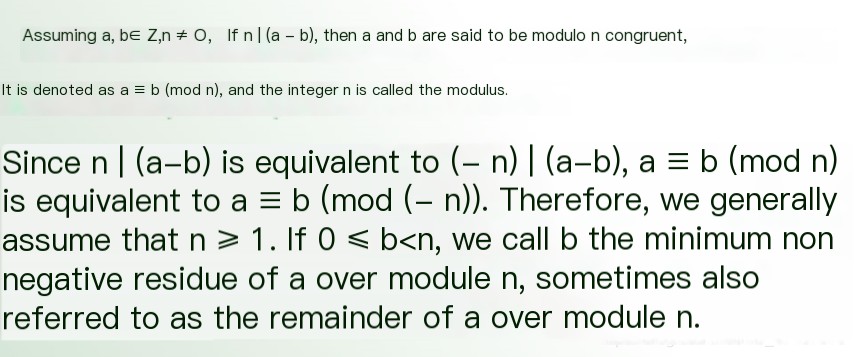

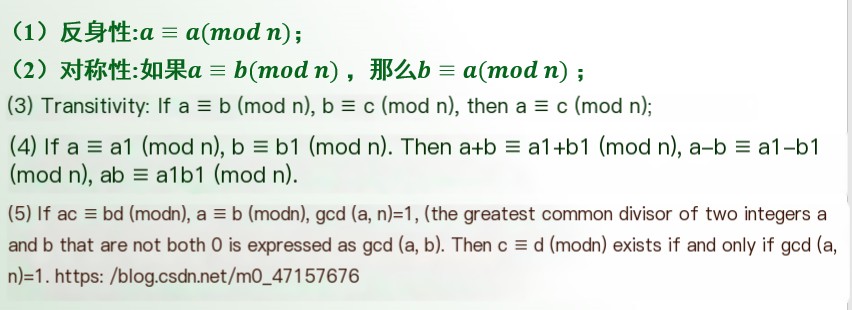

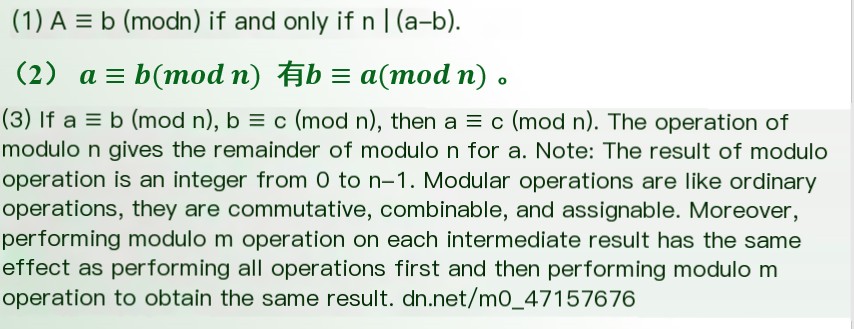

- Congruence

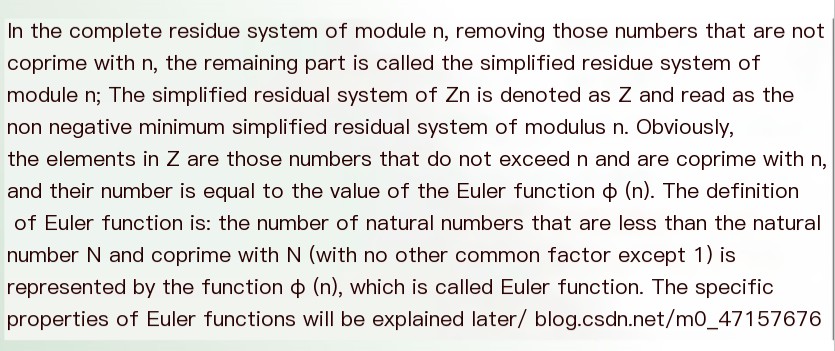

- Reduced Residue System

- Modular Arithmetic

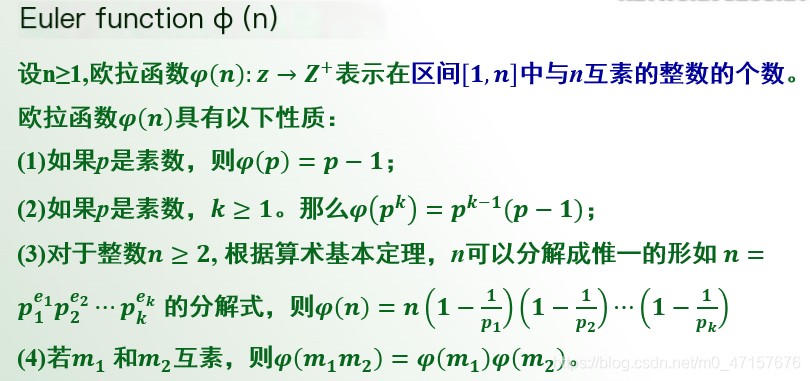

- Euler’s Totient Function
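The terms listed above are defined in the figures. As a rough illustration, and assuming the standard textbook definitions, congruence, the reduced residue system, and Euler's totient function can be sketched as follows. All function names are hypothetical helpers chosen for this example.

```python
from math import gcd

def is_congruent(a, b, n):
    """a is congruent to b modulo n when n divides (a - b)."""
    return (a - b) % n == 0

def reduced_residue_system(n):
    """Residues in 1..n that are coprime to n."""
    return [k for k in range(1, n + 1) if gcd(k, n) == 1]

def euler_phi(n):
    """Euler's totient: the size of the reduced residue system modulo n."""
    return len(reduced_residue_system(n))

print(is_congruent(17, 5, 12))      # 17 - 5 = 12 is divisible by 12
print(reduced_residue_system(10))   # residues coprime to 10
print(euler_phi(10))

# Euler's theorem: a**phi(n) is congruent to 1 mod n when gcd(a, n) == 1.
print(pow(3, euler_phi(10), 10))
```

Counting coprime residues directly is only practical for small n; it is used here because it mirrors the definition.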

3. Basic Knowledge of Algorithm Complexity

- NP Problems: Problems that can be solved in polynomial time on a deterministic Turing machine are called tractable problems; the set of such problems is the class P (deterministic polynomial time). Problems that can be solved in polynomial time on a non-deterministic Turing machine are called NP problems, and the set of them is the class NP (non-deterministic polynomial time). Clearly P ⊆ NP, because any problem solvable in polynomial time on a deterministic Turing machine is also solvable in polynomial time on a non-deterministic one.

- NPC Problems: Within NP there is a class of problems known as NP-complete problems, denoted NPC. Every NP problem can be transformed in polynomial time into an NPC problem, so NPC contains the hardest problems in NP. NPC problems are of equivalent difficulty: each can be transformed in polynomial time into the NPC problem known as the satisfiability problem (SAT). If any one of them belongs to P, then all NP problems belong to P, and P=NP. The security of current cryptographic algorithms rests on problems in NP that are believed to be hard, such as integer factorization and discrete logarithms (these are not known to be NP-complete); breaking a cipher amounts to solving such a problem. If P=NP, breaking these ciphers would be easy.

- Key: A string selected at random by the user according to the rules of a cryptographic system. It is the only parameter that controls the transformation of plaintext into ciphertext (encryption) and of ciphertext back into plaintext (decryption). The collection of all possible keys is called the key space. Generally, the longer the key, the larger the key space and the stronger the encryption.
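To make the NP idea concrete, the sketch below uses the satisfiability problem. Checking a proposed assignment takes time proportional to the formula size, while the naive search tries up to 2^n assignments. The clause encoding (signed integers, as in the DIMACS convention) and the function names are assumptions made for this example.

```python
from itertools import product

def satisfies(clauses, assignment):
    """Polynomial-time check: every clause has at least one true literal.

    A literal k means variable k is True; -k means variable k is False.
    """
    return all(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in clauses
    )

def brute_force_sat(clauses, num_vars):
    """Exponential-time search over all 2**num_vars assignments."""
    for values in product([False, True], repeat=num_vars):
        assignment = {i + 1: v for i, v in enumerate(values)}
        if satisfies(clauses, assignment):
            return assignment
    return None

# (x1 or not x2) and (x2 or x3) and (not x1 or not x3)
formula = [[1, -2], [2, 3], [-1, -3]]
solution = brute_force_sat(formula, 3)
print(solution)

# x1 and not x1 can never both hold, so no assignment exists.
print(brute_force_sat([[1], [-1]], 1))
```

The gap between the cheap `satisfies` check and the expensive search is the same gap a cipher relies on: verifying a guessed key is fast, finding it without extra information should not be.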

4. Components of an Endpoint Encryption System

- An encryption system is essentially a mapping from plaintext to ciphertext carried out by a particular encryption algorithm under the control of a key. It includes at least four components: (1) the message to be encrypted, known as plaintext; (2) the message after encryption, known as ciphertext; (3) the encryption and decryption devices or algorithms; (4) the keys for encryption and decryption. Generally, a key is denoted by K, plaintext by m, the encryption algorithm under key K1 by \( E_{K1} \), and the decryption algorithm under key K2 by \( D_{K2} \); thus \( D_{K2}(E_{K1}(m)) = m \).

- Any encryption system must essentially adhere to four security principles

- Confidentiality: The encryption system provides a secret key or a series of secret keys during the transmission of information to translate information into ciphertext through cryptographic operations, ensuring that unintended parties cannot read the information. Only the sender and receiver should know the content of the information.

- Integrity: The data should not be altered during transmission, and the data received at the destination should be identical to the data sent from the source. Strong passwords and encryption keys should be chosen so that an intruder cannot compromise the keys or find an equivalent encryption algorithm, which prevents the intruder from altering the data and re-encrypting it. Modification of data can often be detected with appropriate methods. For example, a cryptographic hash function (a one-way hash) produces a practically unique “fingerprint” of the plaintext. If the plaintext is intercepted and modified, its hash changes, so the intended recipient can easily notice the difference between the hashes.

- Authentication: The encryption system should provide digital signature techniques so that the user receiving the information can verify who sent it and determine whether it has been tampered with by a third party. As long as the key has not been leaked or shared with others, the sender cannot repudiate the data they sent. In practice, if sender and receiver share a symmetric key, as in bulk encryption or link-level encryption in computer networks, an encrypted session can be established between two routers to send encrypted information across the Internet. Computers connected to the network send plaintext to their router, which converts it into ciphertext and sends it across the Internet to the router at the other end. On any unencrypted segment, whether inside or outside the encrypting routers, the information can be intercepted and analyzed. Moreover, the receiving node can determine only that the information came from a specific network, which makes the authentication mechanism quite complex.

- Non-repudiation: Beyond verifying who sent the information, the encryption system should further verify whether the received information is from a trusted source, essentially through necessary authentication confirming the trustworthiness of the sender. Modern robust authentication methods use encryption algorithms to compare known segments of information, like a PIN (Personal Identification Number), to assess the source’s trustworthiness.
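The hash “fingerprint” mentioned under Integrity can be illustrated with a short sketch. SHA-256 from Python's standard `hashlib` module is an assumed choice here, since the lecture does not name a particular hash function, and the messages are made up.

```python
import hashlib

def fingerprint(message: bytes) -> str:
    """One-way hash of the message, used as its fingerprint."""
    return hashlib.sha256(message).hexdigest()

original = b"Transfer 100 to account 12345"
sent_digest = fingerprint(original)   # computed by the sender

# An attacker changes one character in transit.
tampered = b"Transfer 900 to account 12345"

intact = fingerprint(original) == sent_digest    # unmodified message matches
detected = fingerprint(tampered) != sent_digest  # modification changes the hash

print(intact, detected)
```

A bare hash only helps if the digest itself reaches the recipient unaltered, for example because it is signed or sent over a protected channel.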

2. Methods of Endpoint Encryption

“Classification of Endpoint Encryption Methods”

- Classified by Key Method:

- Symmetric Encryption: The sender and receiver share a single key K, and that same key is used for both encryption and decryption.

- Asymmetric Encryption: Also known as public key encryption, it uses different keys for encryption and decryption. It typically involves two keys, termed the “public key” and the “private key”. They must be used as a pair, or decryption cannot occur. The “public key” can be published openly, while the “private key” is known only to its holder and is not shared with others. K1, the encryption key, is publicly known and is the public key; K2, the decryption key, is kept secret and is the private key.

- Classified by Secrecy Level

- Theoretically Secure Encryption: No matter how much ciphertext is obtained or how much computing power is available, the plaintext cannot be uniquely determined. For example, a one-time pad that uses a truly random key falls under this category.

- Practically Secure Encryption: From a theoretical perspective, it can be broken, but under current objective conditions, a unique solution cannot be determined through computation.

- Classified by Plaintext Form

- Analog Information Encryption: Used to encrypt analog information. For example, encryption of continuously varying voice signals within a dynamic range.

- Digital Information Encryption: Used to encrypt digital information. For example, encryption of telegraph-style information represented as binary 0s and 1s using two discrete levels.
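As an illustration of both symmetric encryption (one shared key for both directions) and the one-time pad mentioned under theoretically secure encryption, here is a minimal sketch. It assumes the pad comes from the operating system's random source via `secrets.token_bytes`, is as long as the message, and is never reused. The helper name `xor_bytes` is hypothetical.

```python
import secrets

def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each message byte with the matching key byte."""
    if len(key) != len(data):
        raise ValueError("a one-time pad must be exactly as long as the message")
    return bytes(d ^ k for d, k in zip(data, key))

plaintext = b"ATTACK AT DAWN"
pad = secrets.token_bytes(len(plaintext))  # shared secret, used only once

ciphertext = xor_bytes(plaintext, pad)     # encryption
recovered = xor_bytes(ciphertext, pad)     # decryption uses the same key

print(recovered)
```

Because every possible plaintext of the same length corresponds to some pad, the ciphertext alone does not single out one plaintext, which is the sense in which the scheme is theoretically secure.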

2. Digital Signature and Endpoint Encryption

- Definition: A digital signature is the process of attaching encrypted information to original information, a type of identity authentication technology that supports the authentication and non-repudiation of encryption systems; the signer is responsible for the content of the original information and cannot deny it.

- Process: When sending a message, the sender uses a hash function to generate a message digest from the message text and then encrypts this digest with their private key. This encrypted digest serves as the digital signature of the message, and both the signature and the message are sent to the receiver. The receiver first calculates the message digest from the received original message using the same hash function as the sender and then uses the sender’s public key to decrypt the digital signature attached to the message. If the two digests match, the receiver can confirm that the digital signature belongs to the sender.
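The signing and verification steps above can be sketched with a toy RSA key pair. The numbers (p = 61, q = 53, e = 17, d = 2753) are a small textbook-sized example chosen for illustration and are far too small to be secure. SHA-256 is an assumed hash function, and reducing the digest modulo n is a shortcut that real signature schemes replace with proper padding.

```python
import hashlib

# Toy RSA key pair from p = 61, q = 53 (illustrative only, not secure).
N = 61 * 53   # modulus, 3233
E = 17        # public exponent
D = 2753      # private exponent: (E * D) % ((61 - 1) * (53 - 1)) == 1

def digest(message: bytes) -> int:
    """Message digest, reduced modulo N so the toy key can process it."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N

def sign(message: bytes) -> int:
    """Sender: encrypt the digest with the private key."""
    return pow(digest(message), D, N)

def verify(message: bytes, signature: int) -> bool:
    """Receiver: decrypt the signature with the public key and compare digests."""
    return pow(signature, E, N) == digest(message)

message = b"Pay Bob 10 units"
signature = sign(message)

print(verify(message, signature))            # genuine signature is accepted
print(verify(message, (signature + 1) % N))  # altered signature is rejected
```

With a realistically sized key, a modified message would also fail verification, because its digest would no longer match the decrypted signature.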

3. Network Information using Endpoint Encryption

- The purpose of network information encryption is to protect data, files, passwords, and control information within a network, as well as data transmitted over the network. Common methods of network encryption include link encryption and endpoint encryption.

“1. Endpoint Encryption: Link Encryption”

- Link encryption protects the link-layer data units by encrypting them, aiming to secure link information between network nodes. This encryption not only encrypts data packets transmitted between nodes but also encrypts routing information, checksums, and control information, including frame headers, padding bits, and control sequences at the data link layer. When the ciphertext is transmitted to a node, it is completely decrypted to obtain link information and plaintext and then completely encrypted and sent to the next node. The design of encryption equipment of this kind is relatively complex, requiring an understanding of the link layer protocol and necessary protocol conversion.

- Advantages and Disadvantages

- Advantages:

Link encryption is highly effective because almost any useful message is protected by encryption. The scope of encryption includes user data, routing information, and protocol information, among other things. Therefore, attackers will not know the identities of the communication senders and receivers, the content of the messages, or even the length of messages and their communication duration. Furthermore, the security of the system does not depend on any transmission management technology. Key management is also relatively simple, requiring only a shared key at each end of the line. The ends of the line can independently replace the key, separate from the rest of the network.

- Disadvantages:

Every segment of the connection needs its own encryption protection. For larger networks made up of sub-networks with different architectures, the overhead in encryption devices, policy management, and key material is enormous. In addition, every encrypting node contains an unencrypted gap in which the information exists as plaintext, which is extremely dangerous, especially for networks spanning different security domains. To address this drawback, additional encryption devices were later added inside the nodes so that no plaintext appears within them. This type of encryption, like link encryption, relies on cooperation from public network node resources, and the overhead remains substantial.

2. Endpoint Encryption

Endpoint encryption protects data all the way from the source user to the destination user, placing the encryption module above the network layer. The data remains in ciphertext form from the encrypting endpoint to the corresponding decrypting endpoint throughout transmission, which overcomes the unencrypted gaps (plaintext at intermediate nodes) found in link encryption. Since encryption and decryption happen only at the two endpoints, the process is transparent to intermediate nodes. This significantly reduces the cost of installing devices (especially at intermediate nodes) and the inconvenience of complex policy and key management. Because the encryption usually operates on higher-layer protocol data, it is easy to provide QoS for different kinds of traffic, encrypting per traffic flow and at varying strengths. This helps improve system effectiveness and optimize system performance.

Endpoint encryption has several disadvantages. First, because communication environments are complex, establishing keys between two endpoint users across the network carries a performance cost. Second, endpoint encryption cannot protect certain information during transmission, such as routing information and protocol information, and a knowledgeable attacker can use this information to launch traffic analysis attacks. Third, implementing endpoint encryption devices (modules) is complicated: the device must understand the protocol layer that provides services to it, invoke those services successfully, process the corresponding data, and pass the processed data to the upper protocol layer. If the encryption device cannot offer a suitable service interface to the upper protocol layer, communication performance suffers significantly.

4. Data Encryption Standards

1. Symmetric Key Encryption DES

- DES encrypts mainly through substitution and permutation, using a 56-bit key to encrypt 64-bit blocks of binary data (the key is 64 bits long, of which 8 bits are parity bits, giving an effective length of 56 bits). Each encryption operation passes a 64-bit input through 16 rounds of substitutions and permutations, turning it into a completely different 64-bit output. DES is fast to compute and its keys are easy to generate, so it suits software implementation on most current computers as well as implementation on specialized chips. However, the DES key is too short (56 bits) to resist exhaustive key search, which weakens confidentiality. Moreover, the security of DES depends entirely on protecting the key. In network environments, the key distribution channel must be highly reliable to preserve the key's confidentiality and integrity.

- Additionally, there are several variations of the DES algorithm, such as Triple DES and Generalized DES. At present, application areas for DES mainly include: data protection in computer network communications (limited to civilian sensitive information); encryption of electronic funds transfers; safeguarding user-stored files to prevent unauthorized surveillance; computer user identification, etc.
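The relationship between the 64-bit DES key and its 56 effective bits can be sketched as follows. The sketch assumes the usual convention that the least significant bit of each key byte is an odd-parity bit; the helper names are hypothetical, and no actual DES encryption is performed.

```python
def set_odd_parity(key: bytes) -> bytes:
    """Overwrite the low bit of each byte so every byte has an odd number of 1 bits."""
    out = bytearray()
    for b in key:
        upper_seven = b & 0xFE                    # the 7 bits that carry key material
        ones = bin(upper_seven).count("1")
        out.append(upper_seven | (0 if ones % 2 == 1 else 1))
    return bytes(out)

def has_odd_parity(key: bytes) -> bool:
    """Check that each byte of the key contains an odd number of 1 bits."""
    return all(bin(b).count("1") % 2 == 1 for b in key)

raw = bytes(8)                    # eight zero bytes: parity is wrong
fixed = set_odd_parity(raw)

print(has_odd_parity(raw), has_odd_parity(fixed))

# Only 7 bits per byte are free, so the key space has 2**56 keys, not 2**64.
effective_bits = 8 * 7
print(effective_bits, 2 ** effective_bits)
```

The final line shows the size of the key space an exhaustive search has to cover, which is the quantity the 56-bit criticism refers to.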

2. Clipper Encryption Chip Standard

- This data encryption standard provides users with only an encryption chip (Clipper) and hardware device, without disclosing the cipher algorithm. The key capacity is over 10 million times that of DES, formally used as the new commercial data encryption standard by the NSA (National Security Agency) in 1993, aiming to replace DES, enhance the security of cipher algorithms, and primarily protect communication exchange systems like telephone, fax, and computer communication information. To ensure more reliable security, production methods of encryption equipment follow stringent regulations, with the Clipper chip produced in its entirety by one company and programmed by another according to specified protocols.

- The main feature of the Clipper chip is that it takes full advantage of high-performance equipment resources to increase key capacity, thus enabling encryption of information over computer communication networks, such as in government and military communication networks. Research into data encryption chips in these networks is continually updated, leading to the realization of standards such as digital signature standards and secure hash function standards, as well as algorithms for generating random data using pure noise sources.

3. International Data Encryption Standard

- The International Data Encryption Algorithm (IDEA) evolved from the DES algorithm. Like DES, it is designed for encrypting blocks of data. It uses a 128-bit key and a series of encryption rounds, each of which uses subkeys derived from the complete encryption key. Whether implemented in software or hardware, the algorithm is very fast, making it well suited to rapid encryption of large amounts of plaintext. It was officially published in 1990 and has been enhanced since then.

4. Drawbacks of Traditional Encryption Methods

In network communications, traditional symmetric encryption has the sender encrypt and the recipient decrypt with the same key. Although these methods compute quickly, distributing keys becomes a serious problem as the number of users grows. If there are n users in the system and every pair must be able to communicate securely, each user must hold (n-1) keys, and the system contains n(n-1)/2 keys in total. For 10 users, each holds 9 keys and the system contains 45 keys. For 100 users, each holds 99 keys and the system contains 4950 keys. These counts assume only a single session key per pair of users. Generating, managing, and distributing such large numbers of keys is a difficult problem. Consequently, the poor key robustness and the complexity of key management in symmetric encryption have limited its development.
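The key counts above follow directly from the formula. A short sketch, with hypothetical function names, makes the growth easy to see:

```python
def keys_per_user(n: int) -> int:
    """Each user shares one key with every other user."""
    return n - 1

def total_pairwise_keys(n: int) -> int:
    """One key per unordered pair of users: n(n-1)/2."""
    return n * (n - 1) // 2

for users in (10, 100, 1000):
    print(users, keys_per_user(users), total_pairwise_keys(users))
```

The total grows roughly with the square of the number of users, which is why key distribution dominates the cost of purely symmetric systems.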

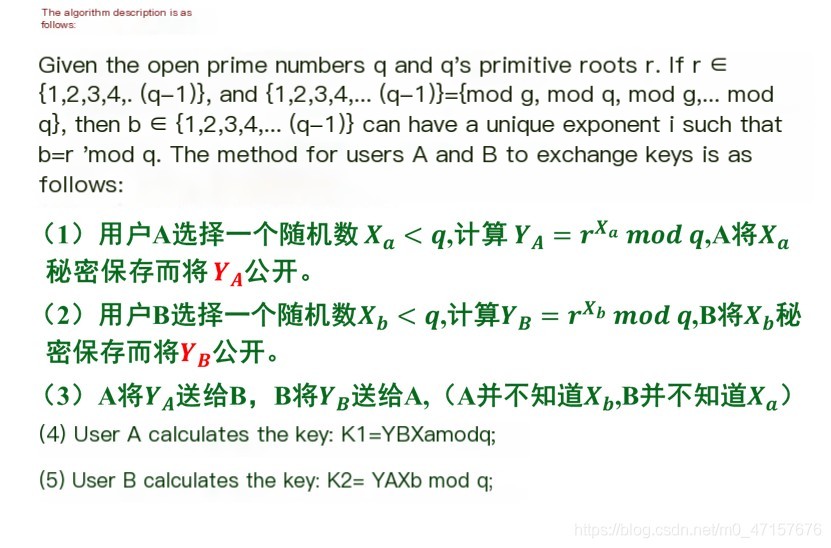

5. Description of the Diffie-Hellman Algorithm

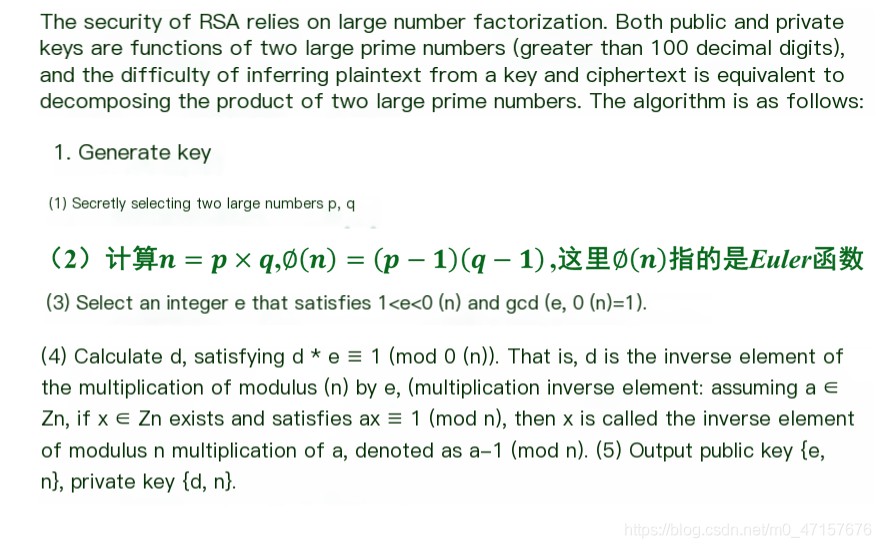

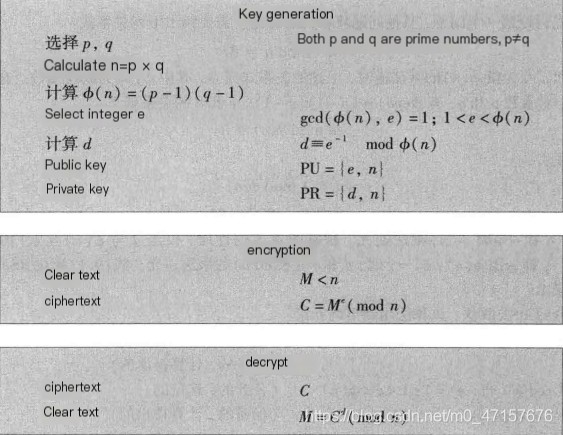
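The algorithm itself is described in the figures. As a rough companion, the following sketch shows the textbook form of the Diffie-Hellman exchange with deliberately tiny numbers. The prime p = 23, the base g = 5, and the private values 6 and 15 are illustrative assumptions and are not taken from the figures.

```python
# Public parameters agreed on in the open (toy values, not secure).
p = 23   # prime modulus
g = 5    # base (generator)

# Each party picks a private value and never transmits it.
alice_private = 6
bob_private = 15

# Each party publishes g raised to its private value, modulo p.
alice_public = pow(g, alice_private, p)
bob_public = pow(g, bob_private, p)

# Each party combines the other's public value with its own private value.
alice_shared = pow(bob_public, alice_private, p)
bob_shared = pow(alice_public, bob_private, p)

print(alice_public, bob_public)   # values an eavesdropper can see
print(alice_shared, bob_shared)   # identical secret on both sides
```

Both sides arrive at the same value because (g^a)^b and (g^b)^a are equal modulo p, while an eavesdropper who sees only the public values would have to solve a discrete logarithm to recover it.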

6. RSA Algorithm

- The well-known RSA public-key algorithm is named after its inventors: Ron Rivest, Adi Shamir, and Leonard Adleman. It provides a basic method for encrypting and authenticating information on public networks. For adequate confidentiality, RSA keys are at least 500 bits long, with a recommended size of 1024 bits (current guidance generally calls for 2048 bits or more), which makes the computation heavy. To reduce the computational load when transmitting information, traditional symmetric encryption is typically combined with RSA public key encryption: the plaintext of the message is encrypted with an improved DES or with IDEA, while RSA is used to encrypt the symmetric key and the message digest. The recipient decrypts the message with the corresponding keys and verifies the information. RSA therefore cannot replace symmetric algorithms like DES: RSA has long keys and encrypts slowly, whereas symmetric algorithms like DES encrypt quickly and are well suited to larger messages, which compensates for RSA's drawbacks. The U.S. Privacy Enhanced Mail (PEM) system combines RSA and DES for confidential mail, and it has become a standard for secure e-mail communication.

- Limited Security

RSA encrypts data in blocks, and its security is based on the number-theoretic difficulty of factoring a large integer n into its two prime factors.

The only solution to RSA’s common modulus attack is to avoid sharing modulus n.

- Slow Computational Speed

RSA computes with very large numbers, so even at its fastest it is about 100 times slower than DES, whether implemented in software or hardware. Speed has always been a weakness of the RSA algorithm. Generally, RSA is used only for encrypting small amounts of data.
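A toy RSA round trip shows how the modulus n and its two prime factors fit together. The primes 61 and 53, the exponent 17, and the message 65 are small illustrative values, not secure parameters, and the function names are hypothetical. The modular inverse via `pow(e, -1, phi)` requires Python 3.8 or later.

```python
from math import gcd

def make_toy_keypair(p: int, q: int, e: int):
    """Build (public, private) RSA keys from two primes and a public exponent."""
    n = p * q
    phi = (p - 1) * (q - 1)   # Euler's totient of n
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime to (p-1)(q-1)")
    d = pow(e, -1, phi)       # private exponent: e*d is 1 modulo phi
    return (n, e), (n, d)

def encrypt(m: int, public) -> int:
    n, e = public
    if not 0 <= m < n:
        raise ValueError("message must be smaller than the modulus")
    return pow(m, e, n)

def decrypt(c: int, private) -> int:
    n, d = private
    return pow(c, d, n)

public, private = make_toy_keypair(61, 53, 17)
ciphertext = encrypt(65, public)
recovered = decrypt(ciphertext, private)

print(public, private)
print(ciphertext, recovered)
```

Anyone who can factor n = 3233 back into 61 and 53 can recompute the private exponent, which is why the security of RSA depends on factoring being hard for large n.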

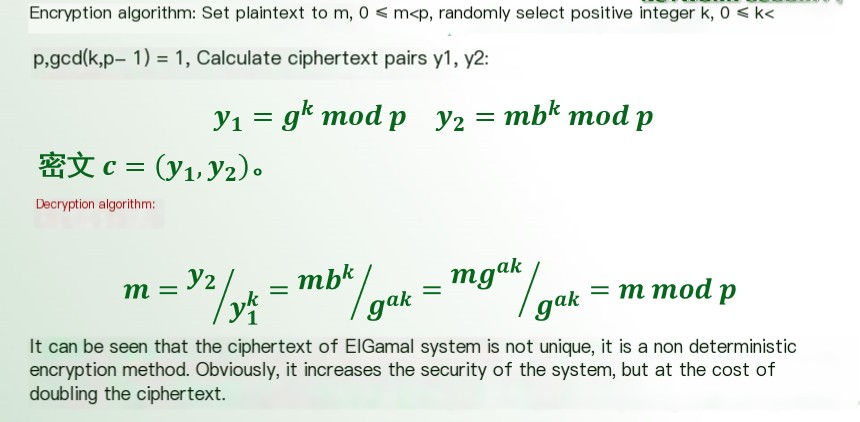

7. ElGamal Encryption Algorithm

8. Elliptic Curve Encryption Algorithm

- In 1985, Koblitz and Miller independently proposed using elliptic curves to construct public key cryptosystems. The approach gained attention because elliptic-curve-based public key cryptosystems offer small overhead (low computational demand) and high security. As elliptic curve cryptosystems have developed, they have been widely expected to replace RSA as the prevailing public key encryption algorithm. In practice, tests of the elliptic curve algorithm on a 32-bit PC and on a 16-bit microprocessor showed that a digital signature takes less than 500 ms even on the 16-bit microprocessor. Consequently, elliptic curve digital signatures are easy to use on devices with limited resources.

9. Quantum Encryption Methods

- Quantum encryption emerged alongside public key encryption standards and is applicable to protecting information transmitted over conventional broadband data channels on networks. Its working principle is as follows: each user generates a private random number string, and the two users send each other a sequence of individual photons (light pulses) representing those strings. The receivers keep the matching bit values from both strings to form the key, which serves as a session or exchange key. Because the method relies on the laws of quantum mechanics, the transmitted photons cannot be eavesdropped on undetected: any eavesdropping disturbs the communication system and irreversibly alters its quantum state, so both parties learn of the eavesdropping, end the communication, and generate a new key. This encryption technology is expected to find use and develop further in the near future, although how to implement digital signatures with it remains to be researched.

5. Overview of Information Encryption Products

1. PGP Encryption Software Overview

- PGP (Pretty Good Privacy) is software for encrypting emails and transmitted documents.

2. Encryption Standard Used by PGP

- PGP utilizes a hybrid algorithm combining public key encryption and traditional encryption.

- For each encryption, PGP randomly generates a key, encrypts the plaintext with the IDEA algorithm under that key, and then uses a public key encryption algorithm to protect the key in transit. Generally, the key is encrypted with RSA or Diffie-Hellman. These algorithms are not suitable for encrypting large amounts of data, so they are used only to secure the key's transmission (that is, to implement key distribution). The recipient likewise uses RSA or Diffie-Hellman to recover the random key and then uses IDEA to decrypt the ciphertext.
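The hybrid structure described above can be sketched with standard-library pieces only. This is not PGP's actual implementation: a SHA-256 based XOR keystream stands in for IDEA, and a toy RSA key built from the Mersenne primes 2^61 - 1 and 2^89 - 1 stands in for a real public key. Both substitutions, and all function names, are assumptions made for illustration and are not secure.

```python
import hashlib
import secrets

# Toy RSA key for wrapping the session key (far too small for real use).
P, Q = 2**61 - 1, 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (Python 3.8+)

def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Stand-in symmetric cipher: XOR data with a SHA-256 counter keystream."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(d ^ s for d, s in zip(data, stream))

def hybrid_encrypt(plaintext: bytes):
    session_key = secrets.token_bytes(16)                    # fresh random key per message
    wrapped = pow(int.from_bytes(session_key, "big"), E, N)  # public key protects the key
    return wrapped, keystream_xor(session_key, plaintext)    # fast cipher protects the data

def hybrid_decrypt(wrapped: int, ciphertext: bytes) -> bytes:
    session_key = pow(wrapped, D, N).to_bytes(16, "big")     # private key recovers the key
    return keystream_xor(session_key, ciphertext)

message = b"A long document would be encrypted symmetrically. " * 4
wrapped_key, body = hybrid_encrypt(message)
print(hybrid_decrypt(wrapped_key, body) == message)
```

Only the 16-byte session key goes through the slow public key operation, while the bulk of the data goes through the fast symmetric cipher, which is the division of labor the text describes.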

3. Security Management Features of PGP

4. Introduction to CryptoAPI Encryption Software

- Microsoft CryptoAPI (Cryptography API) is a set of standard cryptographic interface functions developed by Microsoft. It provides applications in the Win32 environment with security services such as encryption/decryption and digital signature verification. These API functions support generating and exchanging keys, encrypting and decrypting data, managing and authenticating keys, verifying digital signatures, and calculating hashes, enhancing the security and manageability of applications.

5. Structure of the CryptoAPI Encryption System

- Microsoft provides the CryptoAPI interface together with the CSP (cryptographic service provider), an independent module that implements all the encryption operations. A CSP consists of two parts: a DLL (dynamic-link library) file and a signature file. Once a Microsoft-supplied CSP is installed, its files are placed in their respective directories and the CSP is registered in the registry.

6. CryptoAPI Functions

- Base cryptography functions: These basic encryption service functions are API functions implemented directly on top of the functions a CSP provides. They mainly perform operations such as encrypting and decrypting data, calculating hashes, and generating keys.

- Certificate and certificate store functions: The number of signature certificates on a computer grows over time, so certificates need to be managed. These functions let users store, retrieve, delete, list, and verify certificates. They provide storage and the ability to attach certificates to outgoing information, but they do not generate certificates (that is the task of a CA) or certificate requests.