1. Introduction

When developing a mobile application, we typically call the network request APIs provided by the system directly to request data from the server and then process the returned data. Alternatively, we use an open-source network library such as AFNetworking on iOS (on Android, HttpURLConnection or the open-source OkHttp library can be used) to manage request threads and queues and to handle some of the data parsing automatically, and leave it at that.

However, applications that care about user experience go further and optimize for the characteristics of mobile networks, including:

1) Speed Optimization: How can the speed of network requests be further enhanced?

2) Weak Network Adaptation: Mobile network environments change frequently, and connections are often unstable or barely usable. How can requests be completed successfully, and as quickly as possible, in such situations?

3) Security Assurance: How can we prevent interception/modification or impersonation by third parties, avoid interception by carriers, and simultaneously ensure performance is unaffected?

For browser-based frontend development, little can be done at the network level. For native mobile applications, however (here ‘native’ mainly means iOS and Android apps), the entire network request process is under the app’s control and a great deal can be achieved. Many large apps have heavily optimized their network layer around these three issues, and newer protocols such as HTTP2 and QUIC build in many optimizations in the same areas. This article is my attempt to learn and organize the material at the same time: it summarizes the optimization techniques commonly used today for short connections on mainstream mobile networks, in the hope of offering some inspiration.

The material here is also relevant to mobile Instant Messaging (IM) applications, since data communication in mainstream mobile IM today is essentially a combination of long connections and short connections. In some scenarios, optimizing the short connections can therefore make a noticeable difference for mobile IM. WeChat has taken this to the extreme, practically building its own network layer framework for mobile IM (see: “Augmented Release: The Cross-Platform Component Library Mars Used Internally by WeChat for Mobile IM Network Layer is Now Open Source”).

4. Optimization of Request Speed

The usual process for a single network request is as follows:

1) DNS resolution, requesting the DNS server to acquire the IP address corresponding to a domain name;

2) Establishing a connection with the server, including the TCP three-way handshake and the security protocol handshake;

3) Once the connection is established, sending and receiving data and decoding data.

There are three obvious points of optimization here:

1) Directly use the IP address to eliminate the DNS resolution step;

2) Avoid re-establishing the connection for each request by reusing the connection or continuously using the same connection (persistent connection);

3) Compress data to reduce the size of transmitted data.

Let’s look at what can be done for each.

4.1 DNS Optimization

The complete DNS resolution process is lengthy. It starts with the local system cache; on a miss, the query goes to the nearest DNS server, and failing that it travels up toward the root servers. Every layer caches results, and each cached entry has an expiration time so that changes to a domain’s records take effect in reasonable time.

This DNS resolution mechanism has a few drawbacks:

1) If the cache time is set too long, domain updates do not take effect in time; if it is set too short, the large number of DNS resolution requests slows down requests.

2) Domains can be hijacked: resolution is vulnerable to man-in-the-middle attacks and to interception by carriers, so a domain may resolve to a third-party IP address. The hijacking rate is reported to reach 7%.

3) The DNS resolution process is outside the app’s control, so there is no assurance that it resolves to the fastest IP.

4) Each DNS request can resolve only one domain name.

To address these issues, HTTPDNS was devised. The principle is simple: the app performs domain name resolution itself, sending an HTTP request to a backend service to retrieve the IP addresses for a domain name, which addresses all of the issues above.

The benefits of implementing HTTPDNS can be summarized as:

1) Domain name resolution is separated from requests: all requests use IP addresses directly and need no DNS resolution, while the app periodically asks the HTTPDNS server for updated IP addresses.

2) HTTPDNS requests can be secured with mechanisms such as signing to prevent hijacking.

3) Resolution is under the app’s own control, so the service can return the nearest IP address for the user’s location, or the client can pick the fastest IP based on its own speed tests.

4) A single request can resolve multiple domain names.

The remaining details are not elaborated here. Given these advantages, HTTPDNS has almost become a standard feature of medium to large apps. It addresses the first issue, the time spent on DNS resolution, and along the way resolves part of the security issue, namely DNS hijacking.
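The client side of this idea can be sketched as a small cache that resolves several domains in one call and serves IPs until they expire. This is only a minimal illustration: `HttpDnsCache`, the `fetch` callback signature, and `fake_fetch` are hypothetical names, and a real client would replace `fake_fetch` with a signed HTTPS request to its own HTTPDNS backend.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical contract: given domains, return {domain: (ip_list, ttl_seconds)}.
Fetcher = Callable[[List[str]], Dict[str, Tuple[List[str], float]]]


class HttpDnsCache:
    """Client-side cache in front of an HTTPDNS service (illustrative only)."""

    def __init__(self, fetch: Fetcher, clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch
        self._clock = clock
        self._entries: Dict[str, Tuple[List[str], float]] = {}  # domain -> (ips, expiry)

    def refresh(self, domains: List[str]) -> None:
        """Resolve several domains in ONE backend request and record their expiry."""
        now = self._clock()
        for domain, (ips, ttl) in self._fetch(domains).items():
            if ips:
                self._entries[domain] = (list(ips), now + ttl)

    def lookup(self, domain: str) -> Optional[List[str]]:
        """Return cached IPs, or None when missing/expired (caller falls back to system DNS)."""
        entry = self._entries.get(domain)
        if entry is None or entry[1] <= self._clock():
            return None
        return entry[0]


def fake_fetch(domains: List[str]) -> Dict[str, Tuple[List[str], float]]:
    # Stand-in for the real backend call; the addresses are documentation-only IPs.
    table = {
        "api.example.com": ["203.0.113.10", "203.0.113.11"],
        "img.example.com": ["198.51.100.7"],
    }
    return {d: (table[d], 60.0) for d in domains if d in table}
```

Returning `None` on a miss keeps the fallback decision with the caller, so requests still work through ordinary DNS when the HTTPDNS service is unreachable.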

For DNS issues in mobile networks, “Discussing Login Request Optimization for Mobile IM Development” also mentions them, for your reference.

4.2 Connection Optimization

The second issue is the time spent establishing a connection. The main idea here is connection reuse, so that a connection does not have to be re-established for every request. Reusing connections more efficiently is arguably the most important part of optimizing request speed, and the techniques in this area are still evolving, so they are worth understanding.

▼【keep-alive】:

The HTTP protocol has keep-alive, which is enabled by default in HTTP1.1. It partly removes the cost of performing a TCP three-way handshake for every request. The principle is that a connection is not released as soon as its request completes but is placed in a connection pool; a later request to the same domain and port takes a connection from the pool directly, saving the time needed to establish one.

Clients and browsers now enable keep-alive by default, so requests to the same domain no longer establish a new connection each time. Purely short connections no longer exist. However, a keep-alive connection can carry only one request at a time and cannot accept a new request until the previous one completes. When multiple requests are made at the same time, there are two scenarios:

If the requests are sent serially, the same connection can be reused throughout, but this is slow, since each request must wait for the previous one to complete before it is sent.

If the requests are sent in parallel, every request needs its own connection the first time, each with a TCP three-way handshake; from the second time on, the connections in the pool can be reused. However, if the pool keeps too many connections, a lot of server resources are wasted, and if the number of pooled connections is limited, the requests beyond the limit still have to establish a new connection each time.
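The trade-off between serial and parallel requests can be illustrated with a toy pool keyed by (host, port). This is a rough sketch, not any library’s implementation: `FakeConnection`, `ConnectionPool`, and `max_idle_per_host` are hypothetical names, and the `handshakes` counter stands in for the cost of real TCP handshakes.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Tuple

Key = Tuple[str, int]  # connections are only reusable for the same host and port


class FakeConnection:
    """Stand-in for a TCP connection; creating a real one costs a handshake."""

    def __init__(self, host: str, port: int):
        self.host, self.port = host, port


class ConnectionPool:
    def __init__(self, max_idle_per_host: int = 2):
        self.max_idle_per_host = max_idle_per_host
        self.handshakes = 0  # how many new connections had to be established
        self._idle: Dict[Key, Deque[FakeConnection]] = defaultdict(deque)

    def acquire(self, host: str, port: int) -> FakeConnection:
        idle = self._idle[(host, port)]
        if idle:
            return idle.pop()          # reuse: no handshake needed
        self.handshakes += 1           # nothing idle: pay for a new connection
        return FakeConnection(host, port)

    def release(self, conn: FakeConnection) -> None:
        idle = self._idle[(conn.host, conn.port)]
        if len(idle) < self.max_idle_per_host:
            idle.append(conn)          # keep alive for a later request
        # otherwise the connection would be closed to spare server resources
```

With `max_idle_per_host=2`, three serial requests cost one handshake in total, while bursts of three parallel requests keep paying for one extra handshake per burst, because the third connection is never retained.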

To solve this problem, the new generation HTTP2 protocol introduces multiplexing.

▼【Multiplexing】:

The multiplexing mechanism of HTTP2 makes simultaneous handling of multiple requests on a single connection possible, solving the problem of multiple concurrent requests that brought about the need for multiple connections as described above.

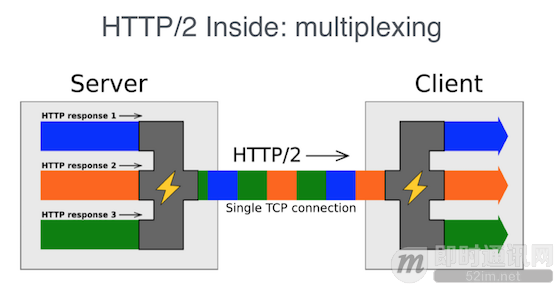

There is a famous diagram on the Internet that vividly illustrates this process:

In HTTP1.1, data on a connection is transmitted serially: the next request cannot be processed until the previous one completes, so the requests cannot make full use of the bandwidth. HTTP1.1 pipelining does allow several requests to be sent back to back, but the responses must still come back in request order, so one response that is slow, large, or failed blocks all the responses behind it.

HTTP2’s multiplexing solves this by splitting the data on a connection into individual streams, each with its own identifier. Data belonging to different streams can be sent and received interleaved, with no ordering dependency between streams, so one stream does not block another. The receiver uses the identifiers to tell the streams apart and reassembles each stream’s data into the final result.

A note on the term multiplexing: ‘multi’ refers to multiple connections or operations, and ‘plexing’ means reuse, that is, sharing a single connection or thread. In HTTP2 it means that multiple requests share one connection. Networking also has I/O multiplexing (select/epoll), which is a different technique: it lets a single thread handle data returned on multiple network requests in an event-driven way.

On mobile clients, iOS 9 and later support HTTP2 natively as long as the backend supports it. On Android, the open-source network library OkHttp supports HTTP2 from version 3. Some large domestic apps build their own network layer that supports HTTP2-style multiplexing, in order to get around system restrictions and add features specific to their business. One example is WeChat’s open-source network library, Mars (see: “Augmented Release: The Cross-Platform Component Library Mars Used Internally by WeChat for Mobile IM Network Layer is Now Open Source”). Most requests in WeChat go through a single long connection, whose multiplexing behavior is essentially the same as HTTP2’s.
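The stream idea can be sketched in a few lines: each response is cut into frames tagged with a stream identifier, frames from different streams are interleaved on the one connection, and the receiver regroups them by identifier. This is a simplified illustration rather than the HTTP2 wire format; the `Frame` tuple, the round-robin scheduling, and the tiny `frame_size` are assumptions made for the example.

```python
from typing import Dict, Iterable, Iterator, List, Tuple

Frame = Tuple[int, bool, bytes]  # (stream_id, end_of_stream, payload)


def split_into_frames(stream_id: int, body: bytes, frame_size: int) -> List[Frame]:
    """Cut one message into frames; the last frame carries the end-of-stream flag."""
    chunks = [body[i:i + frame_size] for i in range(0, len(body), frame_size)] or [b""]
    return [(stream_id, i == len(chunks) - 1, chunk) for i, chunk in enumerate(chunks)]


def interleave(streams: Dict[int, bytes], frame_size: int = 4) -> Iterator[Frame]:
    """Round-robin over streams so no single message monopolises the connection."""
    queues = [split_into_frames(sid, body, frame_size) for sid, body in streams.items()]
    while any(queues):
        for queue in queues:
            if queue:
                yield queue.pop(0)


def reassemble(frames: Iterable[Frame]) -> Dict[int, bytes]:
    """Group frames by stream id; a stream is complete once its end flag arrives."""
    partial: Dict[int, bytearray] = {}
    done: Dict[int, bytes] = {}
    for stream_id, end_of_stream, payload in frames:
        partial.setdefault(stream_id, bytearray()).extend(payload)
        if end_of_stream:
            done[stream_id] = bytes(partial.pop(stream_id))
    return done
```

Because small messages finish after only a frame or two, they are no longer stuck behind a large message that started earlier, which is the blocking that HTTP1.1 pipelining could not avoid.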

▼【TCP Head-of-Line Blocking】:

HTTP2’s multiplexing looks like a perfect solution, but one problem remains: head-of-line blocking, which is imposed by the TCP protocol. To guarantee reliable delivery, when a TCP packet is lost during transmission, the packets behind it must wait for it to be retransmitted before they can be processed. Because all multiplexed HTTP2 requests run on the same connection, a single lost packet causes blocking that holds up every request.

This cannot be optimized without changing the TCP protocol itself, but TCP is implemented by the operating system and partly built into hardware, so improvements to it progress slowly. Google therefore proposed the QUIC protocol (see: “Technical Recap: The Next Generation Low-Latency Network Transmission Layer Protocol Based on UDP, QUIC Explained”), which in effect defines a reliable transmission protocol on top of UDP to address some of TCP’s defects, including head-of-line blocking. Many resources on the specifics are available online.

QUIC is still at an early stage, and few clients have adopted it. Its main advantage over HTTP2 is that it resolves TCP head-of-line blocking. Its other optimizations, such as the 0RTT secure handshake and certificate compression, have also been pursued in TLS1.3 and can be used with HTTP2, so they are not exclusive to QUIC. How much TCP head-of-line blocking actually hurts HTTP2 performance, and how much QUIC improves on it, remains to be seen (for further insight, see “Accelerating the Internet: Next Generation QUIC Protocol’s Technical Practice Sharing at Tencent”).
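A toy model makes the effect concrete. The sketch below is not a TCP implementation; it only models the rule that a receiver hands segments to the application strictly in sequence order. The mapping of segments to streams and the arrival order are made-up values for illustration.

```python
from typing import List, Tuple


def deliver_in_order(arrivals: List[int]) -> List[Tuple[int, int]]:
    """Return (tick, seq) pairs: the arrival tick at which each segment reaches the app."""
    buffered = set()
    next_seq = 0
    delivered = []
    for tick, seq in enumerate(arrivals):
        buffered.add(seq)
        # Data is only released in sequence order, so drain while the
        # next expected segment is available.
        while next_seq in buffered:
            buffered.remove(next_seq)
            delivered.append((tick, next_seq))
            next_seq += 1
    return delivered


# Hypothetical mapping of segments to the HTTP2 streams sharing the connection.
SEGMENT_STREAM = {0: "A", 1: "A", 2: "B", 3: "B", 4: "C"}

# Segment 1 (stream A) is lost at first and only arrives, retransmitted, at tick 4.
ARRIVALS = [0, 2, 3, 4, 1]
```

In this run the segments of streams B and C arrive at ticks 1 to 3 but are all held back until tick 4, even though only stream A lost data.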

PS: Refer to more articles related to the next-generation QUIC protocol:

“Technical Recap: The Next Generation Low-Latency Network Transmission Layer Protocol Based on UDP, QUIC Explained”

“Accelerating the Internet: Next Generation QUIC Protocol’s Technical Practice Sharing at Tencent”

“Qiniu Cloud’s Technical Sharing: Implementing Real-Time Video Streaming Without Stuttering Using the QUIC Protocol”

4.3 Data Compression Optimization

The third issue is the size of the transmitted data. Data affects request speed in two ways: the compression ratio, and the speed of compression/decompression and of serialization/deserialization. The most popular data formats today are JSON and protobuf. JSON is text, whereas protobuf is binary. Even after various compression algorithms are applied, protobuf is generally still smaller than JSON, so it has the advantage in both data size and serialization speed. The comparison is not elaborated here (for details on protobuf, see: “A Detailed Explanation of the Protobuf Communication Protocol: Code Demonstrations, Detailed Principle Introductions, etc.”, “Omni-Directional Evaluation: Is Protobuf Really 5 Times Faster Than JSON?”, “Strongly Suggest Using Protobuf as Your Instant Messaging Application’s Data Transmission Format”).

Compression algorithms are numerous and still evolving; the newer Brotli and Z-standard achieve higher compression ratios. Z-standard can also train a dictionary from samples of the business’s own data, which raises the compression ratio further, and it is currently regarded as one of the best-performing algorithms.

Besides the body, the protocol headers carried by every HTTP request are a non-negligible amount of data. HTTP2 compresses HTTP headers, most of which are repetitive. Fixed fields such as method are encoded with a static dictionary, while fields that are not fixed but repeat across requests, such as cookies, use a dynamic dictionary, which achieves a very high compression ratio. A detailed introduction is available here: “HTTP/2 Header Compression Technology Overview” (its author has researched HTTP2 extensively, and more articles are available in the author’s HTTP2 Technical Series for deeper learning).

With HTTPDNS, connection multiplexing, and better data compression algorithms, network request speed can be optimized to a very good level. Next, let’s look at what can be done about weak networks and security.
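The size difference between a text format and a binary one can be seen with the standard library alone. Note that the binary side below is not protobuf: it uses a fixed `struct` layout as a stand-in for "a binary format with a schema agreed in advance", and the record and its field layout are invented for the example.

```python
import json
import struct

record = {"user_id": 12345, "timestamp": 1700000000, "online": True}

# Text encoding: field names and punctuation travel with every message.
as_json = json.dumps(record, separators=(",", ":")).encode("utf-8")

# Binary encoding with a hypothetical fixed layout known to both sides:
# little-endian uint32 user_id, uint64 timestamp, bool online.
LAYOUT = struct.Struct("<IQ?")
as_binary = LAYOUT.pack(record["user_id"], record["timestamp"], record["online"])


def decode_binary(blob: bytes) -> dict:
    """Rebuild the record; field names come from the shared schema, not the wire."""
    user_id, timestamp, online = LAYOUT.unpack(blob)
    return {"user_id": user_id, "timestamp": timestamp, "online": online}
```

The binary form is smaller mainly because the field names never go over the wire. Protobuf applies the same idea with numbered field tags and variable-length integers instead of a fixed layout.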
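The benefit of a shared dictionary can be demonstrated with zlib’s preset-dictionary support from the Python standard library. This is a swapped-in technique used for illustration, not Z-standard and not a trained dictionary: `SHARED_DICT` is hand-written from an imagined message shape, whereas Z-standard would derive its dictionary from real samples.

```python
import zlib

# Hypothetical dictionary: byte sequences that recur across this app's messages.
# Both client and server must hold exactly the same bytes.
SHARED_DICT = (
    b'{"user_id":,"nickname":"","avatar_url":"https://cdn.example.com/avatar/'
    b'.png","online":true}false'
)


def compress(data: bytes, zdict: bytes = b"") -> bytes:
    """Deflate `data`, optionally priming the compressor with a preset dictionary."""
    comp = zlib.compressobj(level=9, zdict=zdict) if zdict else zlib.compressobj(level=9)
    return comp.compress(data) + comp.flush()


def decompress(blob: bytes, zdict: bytes = b"") -> bytes:
    """Inverse of compress(); the same dictionary must be supplied."""
    decomp = zlib.decompressobj(zdict=zdict) if zdict else zlib.decompressobj()
    return decomp.decompress(blob) + decomp.flush()


message = (
    b'{"user_id":12345,"nickname":"alice",'
    b'"avatar_url":"https://cdn.example.com/avatar/12345.png","online":true}'
)
plain = compress(message)
with_dict = compress(message, SHARED_DICT)
```

Short messages gain the most, because on their own they contain too little repetition for a general-purpose compressor to exploit. The cost is that the dictionary has to be distributed and versioned on both ends.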
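The static-plus-dynamic dictionary idea can be sketched as follows. This is a deliberately simplified model, not the HPACK wire format: the table below holds only four entries with made-up index positions, new entries are appended rather than inserted at the front, and Huffman coding, size limits, and eviction are all left out.

```python
from typing import List, Tuple

Header = Tuple[str, str]

# A tiny excerpt standing in for the static table; real HPACK has a longer,
# fixed table with its own index numbers.
STATIC: List[Header] = [
    (":method", "GET"), (":method", "POST"), (":path", "/"), (":scheme", "https"),
]


class Encoder:
    def __init__(self) -> None:
        self.table = list(STATIC)  # static entries first, dynamic ones appended

    def encode(self, headers: List[Header]) -> list:
        out = []
        for header in headers:
            if header in self.table:
                out.append(("index", self.table.index(header)))  # send a small number
            else:
                out.append(("literal", header[0], header[1]))    # send in full, once
                self.table.append(header)                        # remember for next time
        return out


class Decoder:
    def __init__(self) -> None:
        self.table = list(STATIC)  # must evolve in lockstep with the encoder's table

    def decode(self, items: list) -> List[Header]:
        headers = []
        for item in items:
            if item[0] == "index":
                headers.append(self.table[item[1]])
            else:
                header = (item[1], item[2])
                self.table.append(header)
                headers.append(header)
        return headers
```

On the first request a cookie is sent as a literal, and on every later request on the same connection it shrinks to a single index, which is why repetitive headers compress so well.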

5. Optimization for Weak Mobile Networks

Mobile networks often experience instability, and WeChat has shared substantial practices and insights regarding weak network optimization, including:

1) Improve Connection Success Rate:

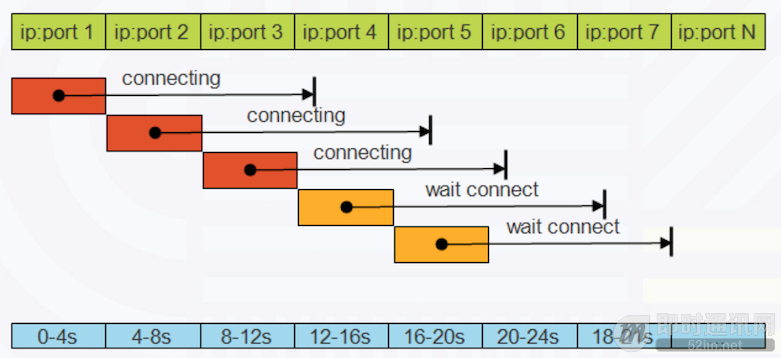

Composite connection, staggering concurrent connections in a ladder-like manner during connection establishment. Once one connection succeeds, other connections close. This scheme combines the advantages of serial and concurrent connections, enhancing weak network connection success rates without intensifying server resource consumption, as shown below:

2) Determine the Optimal Timeout:

Use separate calculation strategies for the total read-write timeout (from sending the request to receiving the response), the first-packet timeout, and the packet-to-packet timeout (between two data segments), so that a timeout is detected sooner, waiting time is reduced, and a retry can start as early as possible. The timeout values can also be adjusted dynamically according to the network status.

3) Optimize TCP parameters and implement TCP optimization algorithms:

Tune the server’s TCP parameters and enable TCP optimization algorithms so that they suit the characteristics of the business and of mobile networks, including the initial RTO value, hybrid slow start, TLP, F-RTO, and so on.

These fine-grained weak network optimizations have not been standardized, so the system network libraries do not support them out of the box. However, the first two, which are client-side optimizations, are implemented in WeChat’s open-source network library, Mars, which you can use if needed (see: “Augmented Release: The Cross-Platform Component Library Mars Used Internally by WeChat for Mobile IM Network Layer is Now Open Source”).
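The composite connection from point 1) can be sketched with `asyncio`. This is an illustrative model and not Mars’s implementation: `staggered_connect`, `fake_endpoint`, and the delays are hypothetical, and the simulated coroutines stand in for real socket connects.

```python
import asyncio
from typing import Any, Callable, Coroutine, List, Optional, Tuple

ConnectFn = Callable[[], Coroutine[Any, Any, str]]


async def staggered_connect(attempts: List[ConnectFn], stagger: float) -> Tuple[Optional[str], int]:
    """Start attempts `stagger` seconds apart; return (winner, attempts_started)."""
    remaining = list(attempts)
    running: List[asyncio.Task] = []
    winner: Optional[str] = None
    started = 0
    try:
        while winner is None and (remaining or running):
            if remaining:
                running.append(asyncio.create_task(remaining.pop(0)()))
                started += 1
            # Wait for a result, but only until it is time to start the next attempt.
            timeout = stagger if remaining else None
            done, _ = await asyncio.wait(
                running, timeout=timeout, return_when=asyncio.FIRST_COMPLETED
            )
            for task in done:
                running.remove(task)
                if task.exception() is None and winner is None:
                    winner = task.result()
    finally:
        # One connection is enough: close (cancel) every attempt still in flight.
        for task in running:
            task.cancel()
        if running:
            await asyncio.gather(*running, return_exceptions=True)
    return winner, started


def fake_endpoint(name: str, delay: float, ok: bool = True) -> ConnectFn:
    """Simulated connect: takes `delay` seconds, then succeeds or raises."""
    async def connect() -> str:
        await asyncio.sleep(delay)
        if not ok:
            raise ConnectionError(name)
        return name
    return connect
```

On a healthy network the first attempt wins before the stagger interval elapses, so no extra connections are opened. Additional attempts are only started when earlier ones are slow or fail.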
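For the dynamic timeouts in point 2), one possible way to derive a first-packet timeout from recent round-trip measurements is sketched below. The smoothing formula is borrowed from TCP’s standard retransmission-timer calculation as an assumption; it is not claimed to be what WeChat or Mars does, and `floor_ms` and `ceiling_ms` are arbitrary illustrative bounds.

```python
from typing import List


def first_packet_timeout(
    rtt_samples_ms: List[float],
    floor_ms: float = 1000.0,
    ceiling_ms: float = 12000.0,
) -> float:
    """Smoothed RTT plus four times its mean deviation, clamped to [floor, ceiling]."""
    srtt = rtt_samples_ms[0]
    rttvar = rtt_samples_ms[0] / 2
    for sample in rtt_samples_ms[1:]:
        # Update the deviation first (using the old srtt), then the smoothed RTT.
        rttvar = 0.75 * rttvar + 0.25 * abs(srtt - sample)
        srtt = 0.875 * srtt + 0.125 * sample
    return min(ceiling_ms, max(floor_ms, srtt + 4 * rttvar))
```

On a fast, steady network the result sits at the floor, so failures are detected and retried quickly. On a slow or jittery network the timeout grows, so requests that are merely slow are not abandoned too early.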

6. Security Aspects

The standard protocol TLS ensures the security of network transmission. Its predecessor is SSL, and it continues to evolve, with TLS1.3 being the latest version. The familiar HTTPS is simply HTTP with the TLS security protocol layered underneath.

Security protocols broadly address two issues:

1) Ensure security;

2) Reduce encryption costs.

In terms of ensuring security:

1) Encrypt the transmitted data with a combination of encryption algorithms to prevent interception and tampering;

2) Authenticate the other party’s identity to prevent impersonation;

3) Keep the encryption algorithms replaceable, so that if an algorithm is broken it can be disabled and swapped for another.

To reduce encryption costs:

1) Use symmetric encryption algorithms to encrypt the transmitted data, which avoids the poor performance and length restrictions of asymmetric encryption algorithms;

2) Cache handshake data of the security protocol, such as keys, to speed up the establishment of subsequent connections;

3) Accelerate the handshake process: 2RTT -> 0RTT. The idea is that, instead of waiting for the client and server to finish negotiating algorithms before any data is encrypted, the client uses a pre-provisioned public key and default algorithms, so that data can be sent together with the handshake with no waiting. This is called 0RTT.
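The effect of the handshake on latency can be put in numbers with some simple arithmetic. The sketch below uses the nominal round-trip counts of each handshake type (one round trip for the TCP handshake, two for a full TLS1.2 handshake, one for a resumed TLS1.2 or a full TLS1.3 handshake, zero for TLS1.3 0RTT). These are protocol-level counts rather than measurements, and the function and mode names are made up for the example.

```python
# Round trips spent before the first request byte can be sent.
TCP_HANDSHAKE_RTTS = 1

TLS_HANDSHAKE_RTTS = {
    "tls1.2_full": 2,      # full negotiation
    "tls1.2_resumed": 1,   # cached session data shortens the handshake
    "tls1.3_full": 1,
    "tls1.3_0rtt": 0,      # data is sent together with the handshake
}


def setup_delay_ms(rtt_ms: float, mode: str, reuse_connection: bool = False) -> float:
    """Nominal connection-setup delay before a request can be sent."""
    if reuse_connection:
        return 0.0  # an already established connection pays nothing again
    return rtt_ms * (TCP_HANDSHAKE_RTTS + TLS_HANDSHAKE_RTTS[mode])
```

With a 100 ms round trip, which is plausible on a weak mobile network, a full TLS1.2 setup costs about 300 ms before the request is even sent, whereas 0RTT over TCP costs about 100 ms and a reused connection costs nothing. This is why handshake caching and connection reuse matter so much on mobile.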

Each of these points involves a wealth of detail. One article introduces TLS very thoroughly and is highly recommended: “TLS Protocol Analysis and Design of Modern Encrypted Communication Protocols” (Note from JackJiang: this article is extraordinarily long; I hope you can read it patiently ^_^).

Currently, the mainstream systems support TLS1.2: the iOS network library uses TLS1.2 by default, and Android supports 1.2 from version 4.4 onward. TLS1.3, however, is still in the testing stage on iOS, and no information was found for Android. For regular apps, TLS1.2 already ensures transmission security as long as certificates are configured correctly, although connection setup is somewhat slower. Some large apps like WeChat have implemented part of the TLS1.3 protocol themselves in order to support it on all platforms ahead of the systems (the WeChat team has shared an article on their TLS1.3 practice, see: “WeChat’s Next-Generation Communication Security Solution: Detailed Description of MMTLS Based on TLS1.3”).

7. Concluding Thoughts

Mobile network optimization is a vast subject. This article only lists, from a learner’s perspective, the optimization points and ideas commonly adopted in the industry; many finer details and deeper optimizations are not covered. I do not have enough hands-on development experience in the network layer, so corrections of any errors are welcome.