Background

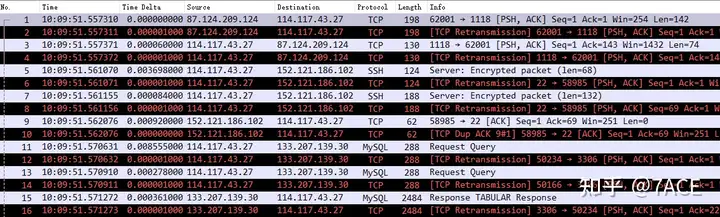

This article explores the troubleshooting process for TCP retransmission issues encountered on a Linux server used in a business system. An R&D colleague reported frequent TCP retransmissions across various types of communication, including business data, SSH, and SFTP traffic. By analyzing the network trace files, we pinpoint the underlying causes of the retransmissions and outline effective solutions to address these network challenges.

My first reaction was, of course, that this was impossible. If retransmissions were really that widespread, the business would have shown problems long ago. Still, I couldn't be completely sure. Being proven wrong is always possible. What if?

Problem Analysis

Since retransmissions had been reported, from a packet-analysis perspective we naturally needed to see them with our own eyes. We first gathered some basic information: the person in charge of the business system provided several end-to-end IP communication pairs, and we learned that the system was deployed across multiple data centers and that multiple machines showed the same problem.

At this point, I felt relieved. Whatever the cause, it almost certainly had nothing to do with the network; otherwise the company’s business would have collapsed long ago.

But the retransmissions were still there in the captures, so we still owed a reasonable explanation of the cause. Only by looking at the packets themselves could we understand the problem clearly.

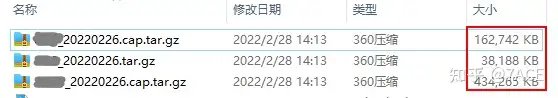

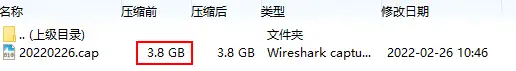

These are the trace files from the three servers. The first problem is their size: the largest file is 3.8G before compression, and even the 434M compressed file is very difficult for Wireshark to parse. It just loads and reloads.

Packet Capture

From personal experience: if you can avoid capture filters, avoid them. Unless there is a performance problem, or you are 120% sure of the protocol, don’t use capture filters. The performance problem in this case is that the trace file is so large that Wireshark cannot load and analyze it well, and has to reload it again and again.

Running capinfos on the largest trace file shows a file size of about 4GB, a duration of 37 minutes, and about 4.77M captured packets. No wonder Wireshark struggles…

$ capinfos 20220226.cap

File name: 20220226.cap

File type: Wireshark/tcpdump/... - pcap

File encapsulation: Linux cooked-mode capture v1

File timestamp precision: microseconds (6)

Packet size limit: file hdr: 262144 bytes

Number of packets: 4770 k

File size: 4097 MB

Data size: 3944 MB

Capture duration: 2220.841404 seconds

First packet time: 2022-02-26 10:09:51.557310

Last packet time: 2022-02-26 10:46:52.398714

Data byte rate: 1776 kBps

Data bit rate: 14 Mbps

Average packet size: 826.73 bytes

Average packet rate: 2148 packets/s

SHA256: 5271fd07c06415eb5ced163bd8365ca8d24855368e7adea9105ad009c683d76f

RIPEMD160: 45a8177d1de968bf22edf66fda39a8bc9baab736

SHA1: 33457cb2fd4d4c15248101e2c7d345878e70b64c

Strict time order: False

Number of interfaces in file: 1

Interface #0 info:

Encapsulation = Linux cooked-mode capture v1 (25 - linux-sll)

Capture length = 262144

Time precision = microseconds (6)

Time ticks per second = 1000000

Number of stat entries = 0

Number of packets = 4770992
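As a quick sanity check, the headline figures can be re-derived from the raw capinfos values. This is only a minimal sketch: the three input numbers are copied from the output above, and the data size is estimated from the rounded average packet size, so it is approximate.

```python
# Values copied from the capinfos output above.
packets = 4_770_992
duration_s = 2220.841404
avg_packet_bytes = 826.73

duration_min = duration_s / 60
packet_rate = packets / duration_s

# Estimated from the rounded average packet size, so only approximate.
est_data_bytes = packets * avg_packet_bytes
byte_rate = est_data_bytes / duration_s

print(f"duration: {duration_min:.1f} min")
print(f"packet rate: {packet_rate:.0f} packets/s")
print(f"estimated data size: {est_data_bytes / 1e6:.0f} MB")
print(f"data rate: {byte_rate / 1e3:.0f} kB/s, {byte_rate * 8 / 1e6:.1f} Mbit/s")
```

The results should line up with the duration, packet rate, and data rate lines that capinfos reports.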

Packet Analysis

It is impractical to open such a large trace file directly for further analysis. Since the person in charge of the business system reported that retransmissions appeared on every kind of TCP traffic, a small sample should be enough, so we can use editcap to extract the first 1000 packets for a quick analysis.

$ editcap -r 20220226.cap test.pcapng 1-1000

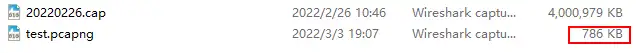

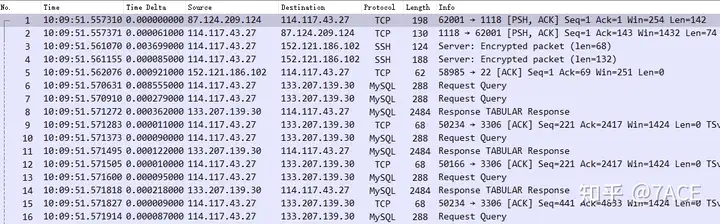

The processed test trace file is only 786 KB. In fact, the problem is obvious as soon as the file is opened: the packets in the trace file are duplicated.

The cause was already hinted at in the capinfos output: Linux cooked-mode capture v1 (25 - linux-sll). This encapsulation is what tcpdump produces when it captures on the any pseudo-interface, so the same packet can be recorded once for every interface it passes through. After further inquiry with the person in charge of the business system, it turned out that the command actually run was tcpdump -i any xxx, and that the server's network cards were bonded. The physical network card captured each packet, and the logical bond interface captured the same packet again, so Wireshark naturally showed a large number of duplicates.
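The same hint can be read straight from the file, without loading it into Wireshark. The sketch below parses the 24-byte global header of a classic pcap file and returns its link-layer type; the function names are made up for illustration, and it assumes a classic pcap file (not pcapng). The link type values 113 (LINUX_SLL) and 276 (LINUX_SLL2) come from the tcpdump link-layer type list. As far as I know, the 25 printed by capinfos is Wireshark's own encapsulation numbering, which differs from the pcap value.

```python
import struct

LINKTYPE_LINUX_SLL = 113   # Linux cooked-mode capture v1
LINKTYPE_LINUX_SLL2 = 276  # Linux cooked-mode capture v2

# First four bytes of a classic pcap file -> struct byte-order prefix.
_MAGICS = {
    b"\xd4\xc3\xb2\xa1": "<",  # little-endian, microsecond timestamps
    b"\xa1\xb2\xc3\xd4": ">",  # big-endian, microsecond timestamps
    b"\x4d\x3c\xb2\xa1": "<",  # little-endian, nanosecond timestamps
    b"\xa1\xb2\x3c\x4d": ">",  # big-endian, nanosecond timestamps
}


def pcap_linktype(header: bytes) -> int:
    """Return the link-layer type from a classic pcap global header."""
    if len(header) < 24 or header[:4] not in _MAGICS:
        raise ValueError("not a classic pcap file header")
    order = _MAGICS[header[:4]]
    # After the magic: version major, version minor, thiszone, sigfigs,
    # snaplen, and the link-type word.
    _major, _minor, _zone, _sigfigs, _snaplen, linktype = struct.unpack(
        order + "HHiIII", header[4:24]
    )
    # The link type sits in the low 16 bits; the high bits may carry FCS info.
    return linktype & 0xFFFF


def is_cooked_capture(path: str) -> bool:
    """True if the pcap file at `path` was written in Linux cooked mode."""
    with open(path, "rb") as f:
        return pcap_linktype(f.read(24)) in (LINKTYPE_LINUX_SLL, LINKTYPE_LINUX_SLL2)
```

A cooked-mode link type does not prove that packets are duplicated, but on a host with bonded NICs it is a good reason to check before treating the "retransmissions" as real.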

$ capinfos 20220226.cap

Interface #0 info:

Encapsulation = Linux cooked-mode capture v1 (25 - linux-sll)

Capture length = 262144

Time precision = microseconds (6)

Time ticks per second = 1000000

Number of stat entries = 0

Number of packets = 4770992

How do we remove the duplicates? Again with editcap. Of the 1000 packets in the original test.pcapng, 517 are duplicates; after removing them, 483 packets remain in test1.pcapng.

$ editcap -d test.pcapng test1.pcapng

1000 packets seen, 517 packets skipped with duplicate window of 5 packets.

$ capinfos -c test1.pcapng

File name: test1.pcapng

Number of packets: 483

After opening the trace file again, the packets look normal, and naturally the actual business traffic is normal as well.
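To make the "duplicate window of 5 packets" message less of a black box, here is a simplified model of the idea. The editcap man page describes -d as comparing the length and MD5 hash of the current packet with the previous four packets; the code below is a sketch of that description, not editcap's actual implementation, and the sample frames are invented purely for illustration.

```python
import hashlib
from collections import deque


def dedupe(packets, window=5):
    """Drop a packet if an identical one is among the previous window-1 packets."""
    recent = deque(maxlen=window - 1)  # fingerprints of the last few packets
    kept, skipped = [], 0
    for pkt in packets:
        fingerprint = (len(pkt), hashlib.md5(pkt).digest())
        if fingerprint in recent:
            skipped += 1
        else:
            kept.append(pkt)
        # Assumption: every packet seen, kept or not, enters the window.
        recent.append(fingerprint)
    return kept, skipped


# Hypothetical frames: each one is recorded twice, once for the physical
# NIC and once for the bond interface.
frames = [b"syn", b"syn-ack", b"ack"]
captured = [copy for frame in frames for copy in (frame, frame)]

kept, skipped = dedupe(captured)
print(f"{len(captured)} packets seen, {skipped} skipped, {len(kept)} kept")
```

Because only a short window is compared, an identical packet that shows up much later falls outside the window and is kept, which is why this kind of filtering removes capture-side duplicates without hiding traffic that is merely repeated over time.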

Summary of the problem

With the correct capture method (here, capturing on a single interface instead of any on a server with bonded network cards), good use of CLI tools, and some reasonable judgment and analysis, the problem resolves itself.