1. Problem Background

This case again comes from a friend. The case itself isn’t complicated: it is mainly a retransmission problem caused by packet loss on the Internet link. However, the initial assessment, based only on a screenshot of the packets, and the final analysis of the actual capture file reached different conclusions. That bit of misdirection is why I’m documenting it here.

2. Problem Information

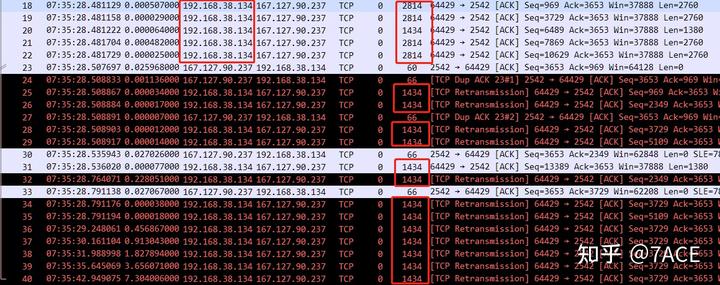

The initial packet screenshot looked roughly like this: a run of timeout retransmissions. What is the problem? Someone new to packet analysis might simply assume packet loss. Someone with a bit more experience might suspect a common Internet MTU (Maximum Transmission Unit) issue: the client sent a 2760-byte segment, exactly 2 × the 1380-byte MSS (Maximum Segment Size), so perhaps a hop along the path could not carry full-sized segments, the 1380-byte packets were discarded, and the client was left timing out and retransmitting.
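To make the MTU hypothesis concrete, here is a minimal sketch of the arithmetic behind it, assuming IPv4 with 20-byte IP and TCP headers and no TCP options on data segments. A 1380-byte payload becomes a 1420-byte IP packet, so a hop with an MTU below 1420 that cannot fragment it (DF set, ICMP “fragmentation needed” filtered) would drop every full-sized segment while the small ones get through. The path MTU value and the function names are hypothetical, for illustration only.

```python
# Minimal sketch of the MTU arithmetic behind the initial hypothesis.
# Assumes IPv4 and TCP headers without options; the path MTU value is hypothetical.

IPV4_HEADER = 20  # bytes, IPv4 header without options
TCP_HEADER = 20   # bytes, TCP header without options


def ip_packet_size(payload: int, tcp_options: int = 0) -> int:
    """Total IPv4 packet size for a TCP segment carrying `payload` bytes."""
    return IPV4_HEADER + TCP_HEADER + tcp_options + payload


def fits_path_mtu(payload: int, path_mtu: int, tcp_options: int = 0) -> bool:
    """True if the segment fits the path MTU without needing fragmentation."""
    return ip_packet_size(payload, tcp_options) <= path_mtu


if __name__ == "__main__":
    hypothetical_path_mtu = 1400  # made-up value, e.g. a tunnel somewhere on the path
    for payload in (213, 737, 1380):
        print(payload, ip_packet_size(payload), fits_path_mtu(payload, hypothetical_path_mtu))
```

With the made-up 1400-byte MTU, the 213- and 737-byte segments fit and the 1380-byte one does not, which is the pattern the screenshot seemed to suggest; the SACK evidence later in the trace is what rules it out.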


Based on the screenshot above, the initial conclusion was that the problem lay in the Internet link, specifically an MTU issue. However, things did not turn out as expected. When I received the actual capture and analyzed it closely, I found that although packets were indeed being lost on the Internet link, the root cause was not MTU-related, so the initial conclusion was wrong. The key information from the capture file is as follows:

λ capinfos 1220.pcapng

File name: 1220.pcapng

File type: Wireshark/... - pcapng

File encapsulation: Ethernet

File timestamp precision: microseconds (6)

Packet size limit: file hdr: (not set)

Packet size limit: inferred: 54 bytes

Number of packets: 40

File size: 4264 bytes

Data size: 37 kB

Capture duration: 14.583142 seconds

First packet time: 2023-12-18 07:35:28.365933

Last packet time: 2023-12-18 07:35:42.949075

Data byte rate: 2553 bytes/s

Data bit rate: 20 kbps

Average packet size: 931.10 bytes

Average packet rate: 2 packets/s

SHA256: 096e0919a13f8ff2c0b8b4691ff638c14345df621ecd9157daa3b3ce3447b88c

SHA1: 3267274c4fce576dde3446d001372b9b34f15717

Strict time order: True

Capture application: Editcap (Wireshark) 4.2.0 (v4.2.0-0-g54eedfc63953)

Capture comment: Sanitized by TraceWrangler v0.6.8 build 949

Number of interfaces in file: 1

Interface #0 info:

Encapsulation = Ethernet (1 - ether)

Capture length = 262144

Time precision = microseconds (6)

Time ticks per second = 1000000

Time resolution = 0x06

Number of stat entries = 0

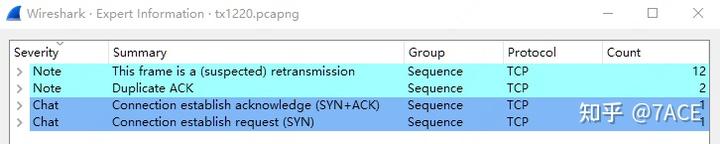

Number of packets = 40The client captured the packets using Wireshark for a duration of 14.58 seconds, resulting in a total of 40 packets and a file size of 4.264 bytes, with an average rate of about 20 kbps. Overall, the transmission rate is quite low. The packets were processed by Editcap and TraceWrangler, which altered the file format and anonymized important data. For an introduction to the TraceWrangler anonymization software, you can refer to my previous article, “Wireshark Tips and Tricks | How to Anonymize Packets.” Based on the expert information, out of the total of 40 packets, there were 12 suspected retransmissions, indicating the poor quality of this TCP connection.
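As a quick cross-check of the capinfos figures, the sketch below recomputes the capture duration from the first and last packet timestamps, and the data rate from the packet count and average packet size. It uses only numbers printed by capinfos above; the result may differ from capinfos in the last digit because of how capinfos rounds its output.

```python
# Cross-check of the capinfos summary using only the values it printed.
from datetime import datetime

FMT = "%Y-%m-%d %H:%M:%S.%f"

first = datetime.strptime("2023-12-18 07:35:28.365933", FMT)
last = datetime.strptime("2023-12-18 07:35:42.949075", FMT)
duration = (last - first).total_seconds()  # capture duration in seconds

packets = 40
avg_packet_size = 931.10                   # bytes per packet, as reported
data_size = packets * avg_packet_size      # original (untruncated) frame bytes
byte_rate = data_size / duration           # bytes per second
bit_rate = byte_rate * 8                   # bits per second

print(f"duration  = {duration:.6f} s")
print(f"data size = {data_size:.0f} bytes")
print(f"byte rate = {byte_rate:.1f} bytes/s, bit rate = {bit_rate / 1000:.1f} kbps")
```

This gives about 37,244 bytes of frame data, roughly 2,554 bytes/s, or about 20.4 kbps, consistent with the “about 20 kbps” summary.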

3. Problem Analysis

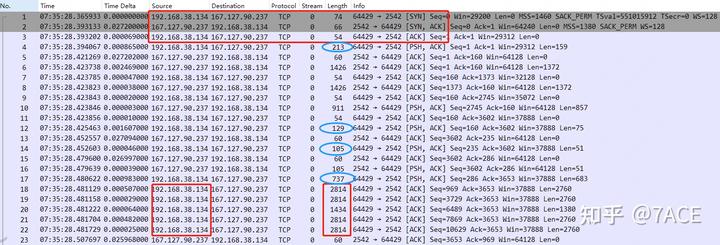

First, in the TCP three-way handshake, the client and server advertise MSS values of 1460 and 1380, respectively. The smaller value applies, so the maximum TCP segment size for this transfer is 1380 bytes. The initial round-trip time (iRTT) is 0.027269 seconds, and both SACK (Selective Acknowledgment) and WS (Window Scale) are supported.
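A minimal sketch of how these handshake options bound the transfer, assuming the usual rules: a sender’s segment size is limited by the MSS the peer advertised and by its own limit, and a Window Scale shift (RFC 7323, capped at 14) applies only when both SYNs carried the option. The MSS values come from this trace; the raw window and shift in the example are made up, since the trace’s values are not given here, and the helper names are hypothetical.

```python
# Sketch of how SYN options bound the transfer. MSS values are from the trace;
# the window field and scale shift used below are hypothetical.

MAX_WS_SHIFT = 14  # RFC 7323 caps the Window Scale shift count at 14


def effective_mss(local_mss: int, peer_mss: int) -> int:
    """Largest payload a sender puts in one segment: the smaller of the two limits."""
    return min(local_mss, peer_mss)


def scaled_window(raw_window: int, shift: int, both_sides_sent_ws: bool = True) -> int:
    """Receive window in bytes for a non-SYN segment, applying Window Scale if negotiated."""
    if not both_sides_sent_ws:
        return raw_window  # scaling is only in effect if both SYNs carried the option
    return raw_window << min(shift, MAX_WS_SHIFT)


if __name__ == "__main__":
    print("client -> server MSS:", effective_mss(1460, 1380))
    print("window:", scaled_window(512, 8))  # hypothetical window field and shift
```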

The client, 192.168.38.134, is the side sending data. Before things go wrong at packet No. 18 (Len 2760), its segments are all small: 213, 129, 105, and 737 bytes. This easily gives the impression that, from packet No. 18 onward, full 1380-byte segments were being lost to an MTU problem, causing the retransmissions.

We can divide the packets into three phases: packets 1 to 3 are the TCP three-way handshake, packets 4 to 16 are normal data exchange, and packets 17 onward are where the problem appears and deserve closer analysis.

The entire analysis process is as follows:

- Packets No. 17 to 22 are data segments sent by the client. Apart from No. 17, a small 683-byte segment, each one is either 1 or 2 MSS (1380 or 2760 bytes).

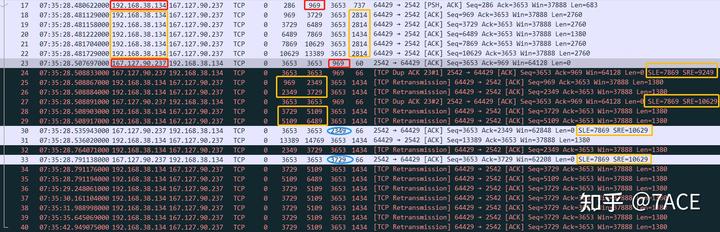

- One RTT later, the server returned ACK No. 23 with acknowledgment number 969, which acknowledged only packet No. 17. The next packet, No. 24, was flagged as a TCP Dup ACK: its acknowledgment number was still 969, and it carried a SACK block with SLE (SACK Left Edge) 7869 and SRE (SACK Right Edge) 9249. This is critical: it shows that the server had received all of the client’s data before sequence number 969, plus the data from 7869 to 9249. In other words, the server did receive a full 1380-byte segment (9249 - 7869 = 1380), which contradicts the initial conclusion that every 1380-byte segment was being discarded for exceeding the MTU somewhere along the path.

- Packets were nevertheless being lost on the Internet link in between. Which data was lost? Sequence numbers 969 to 7869, carried by packets No. 18 to 20. On receiving SACK No. 24, the client quickly retransmitted packets No. 25-26 and No. 28-29. Because the congestion window had been reduced, it retransmitted only 969 to 6489 and held back 6489 to 7869. In the meantime, the client also received SACK No. 27, which confirmed another 1380-byte segment (sequence numbers 9249 to 10629).

- Unfortunately, one RTT later, the server returned SACK No. 30 with acknowledgment number 2349. Compared with before, only one more 1380-byte segment (969 to 2349) had been acknowledged, and the SLE and SRE values had not changed, meaning the other retransmitted segments had been lost as well.

- The congestion window kept shrinking. The client sent only one new data segment, No. 31, and retransmitted packet No. 32 starting at sequence number 2349, since SACK No. 30 showed that the data from 2349 to 7869 was still missing.

- The server’s response, SACK No. 33, again acknowledged only one more 1380-byte segment, advancing the acknowledgment number to 3729. The client then retransmitted packets No. 34-35.

- From packet No. 34 to No. 40, the client made repeated timeout retransmissions, with the timeout interval visibly doubling each time. From then on, no further acknowledgments came back, whether because the Internet link kept dropping packets or because the server had given up waiting and released the connection.
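The SACK reasoning in the steps above can be replayed with a small sketch: given the cumulative ACK and the SACK blocks, list the unacknowledged gaps below the highest SACKed byte, which is where the sender looks for data to retransmit. This is a simplification of a real SACK scoreboard (RFC 6675 adds loss-marking rules such as the duplicate threshold); it works on Wireshark’s relative sequence numbers and ignores wraparound, and the helper names are hypothetical. The ACK numbers and the SACK block in No. 24 come from the analysis; the block layout for No. 27, 30 and 33 is assumed, which does not change the gaps.

```python
# Sketch: replay cumulative ACK + SACK blocks from the trace and list the holes.
# Relative sequence numbers, no wraparound handling; simplified vs. RFC 6675.

MSS = 1380  # effective MSS from the handshake


def merge_blocks(blocks):
    """Merge overlapping or adjacent SACK blocks given as (left_edge, right_edge)."""
    merged = []
    for left, right in sorted(blocks):
        if merged and left <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], right))
        else:
            merged.append((left, right))
    return merged


def sack_holes(cum_ack, blocks):
    """Unacknowledged ranges between the cumulative ACK and the highest SACKed byte."""
    holes = []
    start = cum_ack
    for left, right in merge_blocks(blocks):
        if right <= start:
            continue  # block is already covered by the cumulative ACK
        if left > start:
            holes.append((start, left))
        start = max(start, right)
    return holes


# (frame, cumulative ACK, SACK blocks); block layout for No. 27, 30 and 33 is assumed.
TRACE = [
    (24, 969, [(7869, 9249)]),
    (27, 969, [(7869, 9249), (9249, 10629)]),
    (30, 2349, [(7869, 10629)]),
    (33, 3729, [(7869, 10629)]),
]

if __name__ == "__main__":
    for frame, ack, blocks in TRACE:
        print(f"No. {frame}: ack={ack} holes={sack_holes(ack, blocks)}")
    acks = [969, 2349, 3729]  # cumulative ACKs from No. 24, 30 and 33
    progress = [(b - a) // MSS for a, b in zip(acks, acks[1:])]
    print("MSS acknowledged per round:", progress)  # [1, 1]
```

The single hole shrinks from (969, 7869) to (2349, 7869) and then (3729, 7869): each round, only one retransmitted MSS made it through, which matches the step-by-step reading above.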
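The doubling timeouts in the last step are TCP’s exponential RTO backoff (RFC 6298: after each retransmission timeout the RTO is doubled, optionally up to a maximum). Below is a minimal sketch with two hypothetical helpers: one generates a backoff schedule, and one checks whether observed retransmission times actually double. The initial RTO, the 120-second cap (the Linux default, assumed here) and the timestamps are placeholders, not values from this capture; with the real trace you would feed in the relative times of the retransmitted frames.

```python
# Sketch of exponential RTO backoff; all numeric inputs below are hypothetical.


def rto_schedule(initial_rto: float, retries: int, max_rto: float = 120.0) -> list[float]:
    """Successive RTO values: each timeout doubles the previous one, capped at max_rto."""
    schedule = []
    rto = initial_rto
    for _ in range(retries):
        schedule.append(rto)
        rto = min(rto * 2, max_rto)
    return schedule


def backoff_ratios(timestamps: list[float]) -> list[float]:
    """Ratio of each gap between consecutive retransmissions to the gap before it."""
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    return [nxt / prev for prev, nxt in zip(gaps, gaps[1:])]


if __name__ == "__main__":
    print(rto_schedule(1.0, 5))                         # hypothetical 1 s initial RTO
    print(backoff_ratios([0.0, 1.0, 3.0, 7.0, 15.0]))  # made-up retransmission times
```

Ratios close to 2.0 on the real retransmission timestamps would confirm the doubling seen in the trace.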

4. Conclusion

Over the course of this analysis, the SACK blocks (SLE and SRE) are what steered the root-cause judgment away from an MTU problem. My friend later confirmed that the application’s packet loss was indeed caused by loss on the carrier’s line, which matches what the packets show (the lost segments follow no discernible pattern). After all, packets never lie.