Running Mode

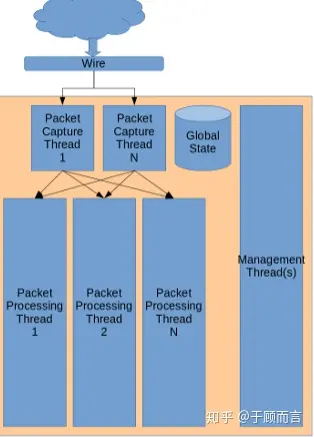

Suricata is composed of threads and queues. Data packets are transferred between threads through queues. Each thread consists of multiple thread modules, with each module implementing a specific function.
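
The relationship between a thread and its modules can be pictured as a chain of slots, each holding one module function that the packet passes through in order. The following is a minimal sketch under that assumption; every name in it (SketchPacket, SketchSlot, SketchRunSlots, and so on) is hypothetical and far simpler than Suricata's real structures.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified packet: only the fields this sketch needs. */
typedef struct SketchPacket_ {
    int decoded;   /* set by the decode stage */
    int inspected; /* set by the flow-worker stage */
} SketchPacket;

/* A thread module is modeled as one function applied to a packet. */
typedef int (*SketchModuleFunc)(SketchPacket *p);

/* A slot holds one module; slots are chained in processing order. */
typedef struct SketchSlot_ {
    const char *name;
    SketchModuleFunc func;
    struct SketchSlot_ *next;
} SketchSlot;

int SketchDecode(SketchPacket *p)
{
    p->decoded = 1;
    return 0;
}

int SketchFlowWorker(SketchPacket *p)
{
    if (!p->decoded)
        return -1; /* refuse packets that skipped decoding */
    p->inspected = 1;
    return 0;
}

/* Run the packet through every slot; stop at the first failure. */
int SketchRunSlots(SketchSlot *head, SketchPacket *p)
{
    for (SketchSlot *s = head; s != NULL; s = s->next) {
        if (s->func(p) != 0)
            return -1;
    }
    return 0;
}
```

Chaining a decode slot in front of a flow-worker slot and calling SketchRunSlots on the head processes the packet through both stages in order.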

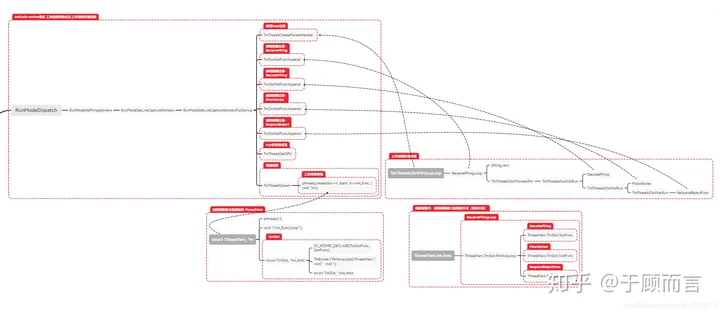

Suricata has various running modes, determined by the capture driver and by whether it runs as an IDS or an IPS. Capture drivers include pcap, pcap file, nfqueue, ipfw, dpdk, and other specific capture drivers. Suricata selects exactly one running mode at startup: for example, the -i option selects pcap, -r selects pcap file, and -q selects nfqueue. Each mode initializes its own threads and queues, and the actual work of the mode is done by the thread modules. Depending on how threads and modules are organized, running modes are further divided into “autofp”, “single”, and “workers”. The available modes can be listed with ./suricata --list-runmodes.
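
To make the option-to-mode mapping concrete, here is a small illustrative sketch that covers only the three options named above and the three thread layouts. The enum and function names are hypothetical and are not taken from Suricata's source.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical enum: capture methods named in the text. */
typedef enum {
    OPT_CAPTURE_UNKNOWN = 0,
    OPT_CAPTURE_PCAP,      /* -i */
    OPT_CAPTURE_PCAP_FILE, /* -r */
    OPT_CAPTURE_NFQUEUE    /* -q */
} OptCapture;

/* Map a command-line flag to the capture method it selects. */
OptCapture OptCaptureFromFlag(const char *flag)
{
    if (strcmp(flag, "-i") == 0)
        return OPT_CAPTURE_PCAP;
    if (strcmp(flag, "-r") == 0)
        return OPT_CAPTURE_PCAP_FILE;
    if (strcmp(flag, "-q") == 0)
        return OPT_CAPTURE_NFQUEUE;
    return OPT_CAPTURE_UNKNOWN;
}

/* Return 1 if name is one of the three thread layouts, else 0. */
int OptIsKnownLayout(const char *name)
{
    static const char *const layouts[] = { "autofp", "single", "workers" };
    for (size_t i = 0; i < sizeof(layouts) / sizeof(layouts[0]); i++) {
        if (strcmp(name, layouts[i]) == 0)
            return 1;
    }
    return 0;
}
```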

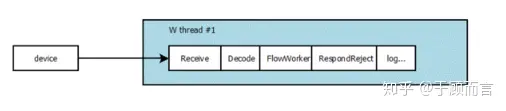

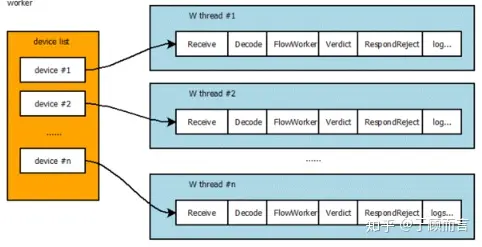

Generally, workers mode performs best: the NIC driver distributes packets evenly across Suricata's processing threads, and each thread contains a complete packet-processing pipeline.

- Single mode

- Workers

The thread module is an abstraction of the packet processing task. The following are the main types of thread modules:

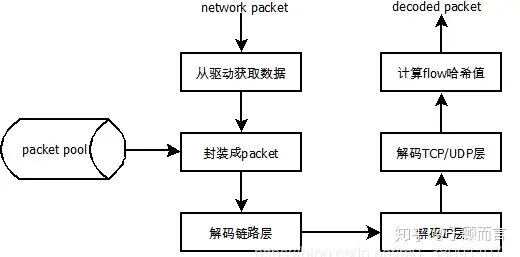

1. Receive module:

Collects network packets, encapsulates them into Packet objects, and then passes them to the Decode thread module.

2. Decode module:

Decodes the Packet according to the 4-layer protocol model (data link layer, network layer, transport layer, application layer), extracts protocol and payload information, and passes the Packet to the FlowWorker thread module after decoding. This module mainly decodes packets and does not handle the application layer. The application layer is handled by a dedicated application layer decoding module.

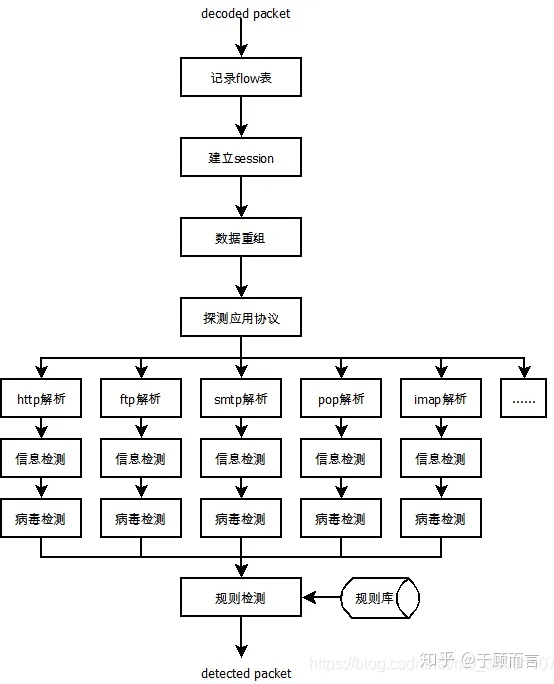

3. FlowWorker module:

Allocates flows for packets, manages TCP sessions, performs TCP reassembly, and processes application layer data for detection rule checking.

4. Verdict module:

Handles the dropping of packets marked as drop based on the Detect module's results.

5. RespondReject module:

Sends reset packets to both ends for rejected packets based on detection results.

6. Logs module:

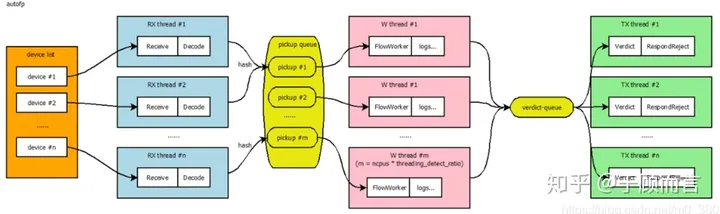

Records processing results in the logs.

- Autofp (default running mode)

For processing PCAP files, or in certain IPS setups (such as NFQ), autofp adds one or more threads dedicated to packet capture and decoding:

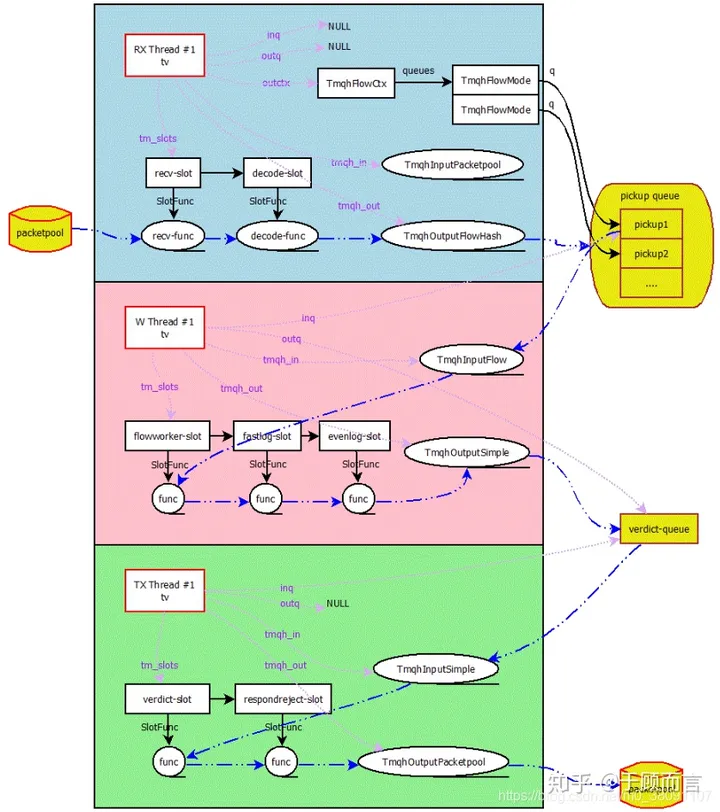
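
The name autofp refers to flow-pinned load balancing: the capture threads hand all packets of one flow to the same worker thread. A minimal sketch of that idea follows, assuming a direction-independent hash over the 5-tuple. The hash function and all names (PinTuple, PinFlowHash, PinSelectQueue) are hypothetical illustrations, not Suricata's actual implementation.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical 5-tuple identifying a flow. */
typedef struct PinTuple_ {
    uint32_t src_ip;
    uint32_t dst_ip;
    uint16_t src_port;
    uint16_t dst_port;
    uint8_t proto;
} PinTuple;

/* Direction-independent hash: XOR is commutative, so swapping
 * source and destination yields the same value. */
uint32_t PinFlowHash(const PinTuple *t)
{
    uint32_t h = (t->src_ip ^ t->dst_ip) * 2654435761u;
    h ^= (uint32_t)(t->src_port ^ t->dst_port) << 8;
    h ^= t->proto;
    h ^= h >> 16;
    return h;
}

/* Pick the worker queue for a packet; n_queues must be > 0. */
unsigned PinSelectQueue(const PinTuple *t, unsigned n_queues)
{
    return PinFlowHash(t) % n_queues;
}
```

Because both directions of a connection hash to the same value, a single worker sees the whole conversation, which is what TCP reassembly and per-flow state need.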

- RX thread

The RX thread runs the function TmThreadsSlotPktAcqLoop, which mainly performs the following tasks:

1. Setting a meaningful name for the thread;

2. Binding the thread to a specific CPU core;

3. Creating a Packet object pool for quickly storing network packet data;

4. Initializing the thread modules associated with this thread;

5. Invoking the packet collection module, starting packet collection, processing packets in sequence through each module, and placing packets in the output queue;

6. If a termination signal is received, stopping packet collection, destroying the Packet object pool, and calling the thread module's exit cleanup function;

7. Exiting thread execution.

- W thread

The W thread runs the function TmThreadsSlotVar, which mainly performs the following tasks:

1. Setting a meaningful name for the thread;

2. Binding the thread to a specific CPU core;

3. Creating an empty Packet object pool;

4. Initializing the thread modules associated with this thread;

5. Retrieving packets from the input queue, sequentially passing them through thread modules for processing, and placing them in the output queue;

6. If a termination signal is received, stopping packet processing, destroying the Packet object pool, and calling the thread module's exit cleanup function;

7. Exiting thread execution.
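
The W thread's lifecycle (steps 4 to 7) can be sketched as a single function: initialize, loop over the input queue, then clean up. This is a simplified, single-threaded illustration with hypothetical names (LoopQueue, LoopWorker, LoopWorkerRun). It omits thread naming and CPU binding, which are platform-specific, and it treats an empty input queue like a termination signal so that it can run without real threads.

```c
#include <assert.h>
#include <stddef.h>

#define LOOP_QUEUE_CAP 8

/* Hypothetical fixed-size FIFO of packet ids; not thread-safe. */
typedef struct LoopQueue_ {
    int items[LOOP_QUEUE_CAP];
    size_t head;
    size_t len;
} LoopQueue;

int LoopQueuePush(LoopQueue *q, int pkt)
{
    if (q->len == LOOP_QUEUE_CAP)
        return -1;
    q->items[(q->head + q->len) % LOOP_QUEUE_CAP] = pkt;
    q->len++;
    return 0;
}

int LoopQueuePop(LoopQueue *q, int *pkt)
{
    if (q->len == 0)
        return -1;
    *pkt = q->items[q->head];
    q->head = (q->head + 1) % LOOP_QUEUE_CAP;
    q->len--;
    return 0;
}

typedef struct LoopWorker_ {
    LoopQueue *inq;
    LoopQueue *outq;
    int stop;          /* termination signal */
    int modules_ready; /* 1 between init and cleanup */
    int processed;     /* packets forwarded to outq */
} LoopWorker;

/* Body of a W-style thread: init, process the input queue, clean up. */
void LoopWorkerRun(LoopWorker *w)
{
    int pkt;

    w->modules_ready = 1; /* step 4: initialize thread modules */

    /* Step 5: take packets from inq, process them, place them in outq.
     * The loop also ends when inq is empty or outq is full. */
    while (!w->stop && w->outq->len < LOOP_QUEUE_CAP &&
           LoopQueuePop(w->inq, &pkt) == 0) {
        pkt += 1000; /* stand-in for the chain of thread modules */
        LoopQueuePush(w->outq, pkt);
        w->processed++;
    }

    w->modules_ready = 0; /* step 6: exit cleanup */
}                         /* step 7: thread function returns */
```

In a real multi-threaded setup this function would be the thread's entry point and would block on the input queue instead of returning when it is empty.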

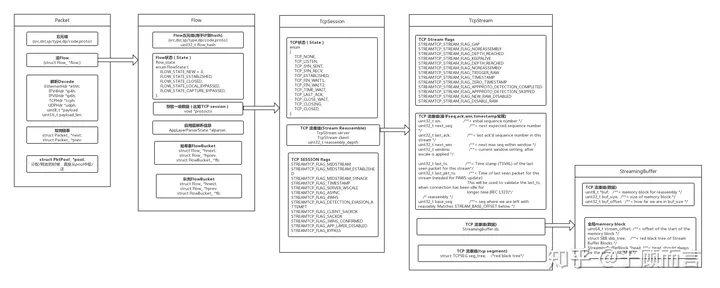

Data Structure

- Row-level Locks

Snort processes packets in a single thread and cannot fully utilize multi-core CPUs. To address this, Suricata uses a multi-threaded architecture that processes packets concurrently. Much of the data is shared among threads, so row-level locks, hash tables, and other efficient data structures are used extensively. The first row-level lock is in the connection management module, which stores flows in a hash table (average lookup cost O(1)): each hash bucket (FlowBucket) carries its own lock, and each Flow node carries a further lock for higher concurrency.

    typedef struct FlowBucket_ {
        Flow *head; /* List head */
        Flow *tail; /* List tail */
        /* Row lock type */
    #ifdef FBLOCK_MUTEX
        SCMutex m;
    #elif defined FBLOCK_SPIN
        SCSpinlock s;
    #else
        #error Enable FBLOCK_SPIN or FBLOCK_MUTEX
    #endif
    } __attribute__((aligned(CLS))) FlowBucket;

    typedef struct Flow_
    {
        ...
        /* Node locks for higher concurrency */
    #ifdef FLOWLOCK_RWLOCK
        SCRWLock r;
    #elif defined FLOWLOCK_MUTEX
        SCMutex m;
    #else
        #error Enable FLOWLOCK_RWLOCK or FLOWLOCK_MUTEX
    #endif
        ...
        /* Formed by hnext and hprev as a doubly linked list */
        /** Hash list pointers protected by fb->s */
        struct Flow_ *hnext; /* Hash list */
        struct Flow_ *hprev;
        ...
    } Flow;
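
To show how a per-bucket (row) lock is used, here is a minimal sketch of a lookup-or-insert on a hash table in which each bucket has its own mutex. It uses plain POSIX mutexes instead of Suricata's SCMutex wrappers. The table size, the integer key, and all names (RowFlow, RowBucket, RowGetOrInsert) are assumptions made for illustration.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <stdint.h>

#define ROW_HASH_SIZE 16

/* Hypothetical, reduced flow node: a key plus the hash list pointers. */
typedef struct RowFlow_ {
    uint32_t key; /* stand-in for the hashed flow tuple */
    struct RowFlow_ *hnext;
    struct RowFlow_ *hprev;
} RowFlow;

typedef struct RowBucket_ {
    RowFlow *head;
    RowFlow *tail;
    pthread_mutex_t m; /* row lock: guards only this bucket's list */
} RowBucket;

RowBucket row_table[ROW_HASH_SIZE];

void RowTableInit(void)
{
    for (size_t i = 0; i < ROW_HASH_SIZE; i++) {
        row_table[i].head = NULL;
        row_table[i].tail = NULL;
        pthread_mutex_init(&row_table[i].m, NULL);
    }
}

/* Return the flow stored under key. If none exists, link the
 * caller-provided node `fresh` at the tail of the bucket and return it. */
RowFlow *RowGetOrInsert(uint32_t key, RowFlow *fresh)
{
    RowBucket *fb = &row_table[key % ROW_HASH_SIZE];

    pthread_mutex_lock(&fb->m); /* only this row is locked */
    RowFlow *f = fb->head;
    while (f != NULL && f->key != key)
        f = f->hnext;
    if (f == NULL) {
        fresh->key = key;
        fresh->hnext = NULL;
        fresh->hprev = fb->tail;
        if (fb->tail != NULL)
            fb->tail->hnext = fresh;
        else
            fb->head = fresh;
        fb->tail = fresh;
        f = fresh;
    }
    pthread_mutex_unlock(&fb->m);
    return f;
}
```

Threads working on flows that hash to different buckets never contend for the same mutex, which is the advantage over a single table-wide lock.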

- nf_conntrack_lock

This lock, from Linux netfilter connection tracking, protects the global session table. When a CPU tries to match the current packet (skb) against a session, or is about to insert a new session, it must acquire nf_conntrack_lock.

Data Flow Chart

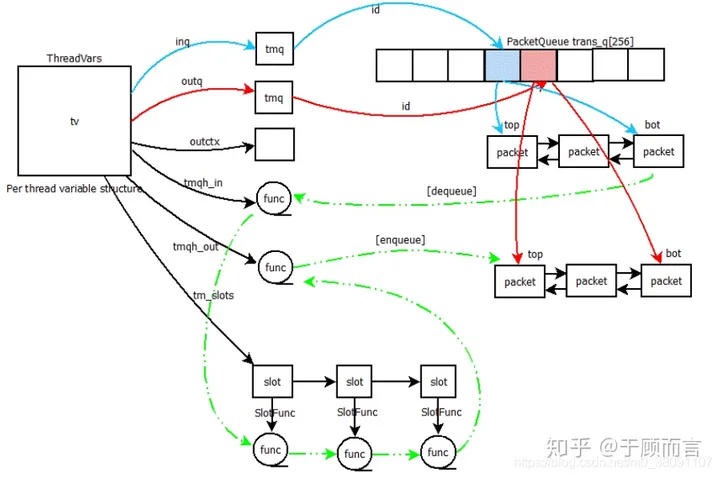

- Data Transfer Between Thread Modules

Within the same thread, data is mainly transferred between modules as parameters. Different threads use shared queues for data transfer. Each thread is abstracted by a ThreadVars structure, which specifies the input data queue inq and output data queue outq for the thread. These queues are shared among multiple threads, where one thread’s output queue could be another thread’s input queue.
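
The queue sharing described above can be sketched with a mutex-protected FIFO and a reduced stand-in for ThreadVars that keeps only the inq and outq pointers. All names here (SharedQueue, SharedThreadVars, and the functions) are hypothetical, and the real ThreadVars structure contains far more than this.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define SHARED_QUEUE_CAP 8

/* Hypothetical bounded FIFO of packet ids shared between threads. */
typedef struct SharedQueue_ {
    int items[SHARED_QUEUE_CAP];
    size_t head;
    size_t len;
    pthread_mutex_t lock;
    pthread_cond_t not_empty;
} SharedQueue;

/* Reduced stand-in for ThreadVars: just a name and the two queues. */
typedef struct SharedThreadVars_ {
    const char *name;
    SharedQueue *inq;  /* queue this thread reads from */
    SharedQueue *outq; /* queue this thread writes to */
} SharedThreadVars;

void SharedQueueInit(SharedQueue *q)
{
    q->head = 0;
    q->len = 0;
    pthread_mutex_init(&q->lock, NULL);
    pthread_cond_init(&q->not_empty, NULL);
}

/* Append a packet; returns 0 on success, -1 if the queue is full. */
int SharedQueueEnqueue(SharedQueue *q, int pkt)
{
    int ret = -1;
    pthread_mutex_lock(&q->lock);
    if (q->len < SHARED_QUEUE_CAP) {
        q->items[(q->head + q->len) % SHARED_QUEUE_CAP] = pkt;
        q->len++;
        pthread_cond_signal(&q->not_empty); /* wake one waiting consumer */
        ret = 0;
    }
    pthread_mutex_unlock(&q->lock);
    return ret;
}

/* Remove the oldest packet, blocking while the queue is empty. */
int SharedQueueDequeue(SharedQueue *q)
{
    pthread_mutex_lock(&q->lock);
    while (q->len == 0)
        pthread_cond_wait(&q->not_empty, &q->lock);
    int pkt = q->items[q->head];
    q->head = (q->head + 1) % SHARED_QUEUE_CAP;
    q->len--;
    pthread_mutex_unlock(&q->lock);
    return pkt;
}
```

Pointing one thread's outq and another thread's inq at the same SharedQueue connects the two: whatever the first thread enqueues, the second dequeues in the same order.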

- Packet Transfer Path in Autofp Mode